AI-Generated Code at Enterprise Scale


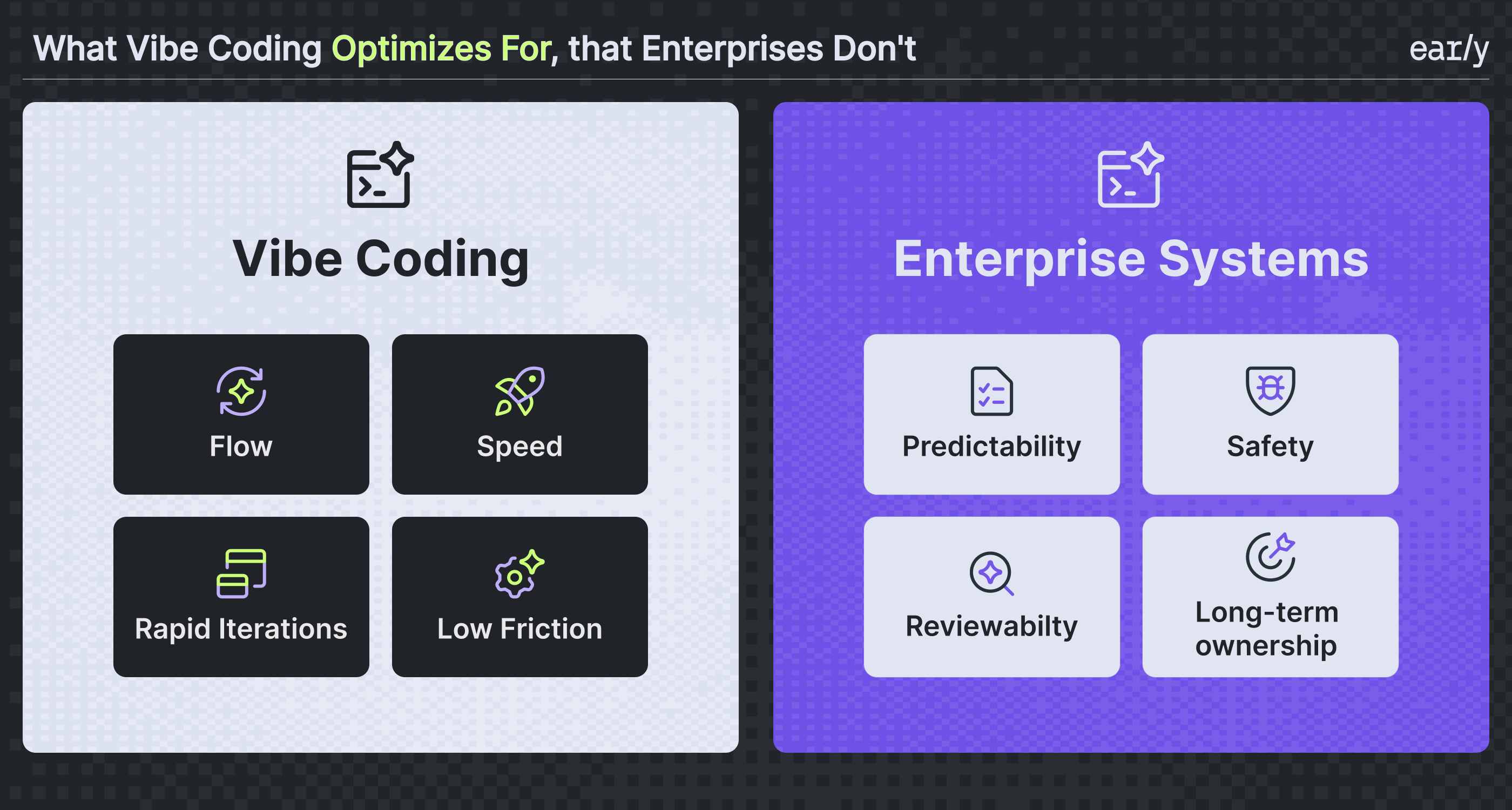

Vibe coding optimizes for momentum: staying in flow and generating code quickly.

Enterprise software optimizes for safety, where changes must be understandable, reviewable, and reliable over time.

Production systems carry invisible context that isn’t present during code generation. Even clean-looking output doesn’t always fit the system it’s introduced to.

That mismatch is where enterprise adoption begins to break down.

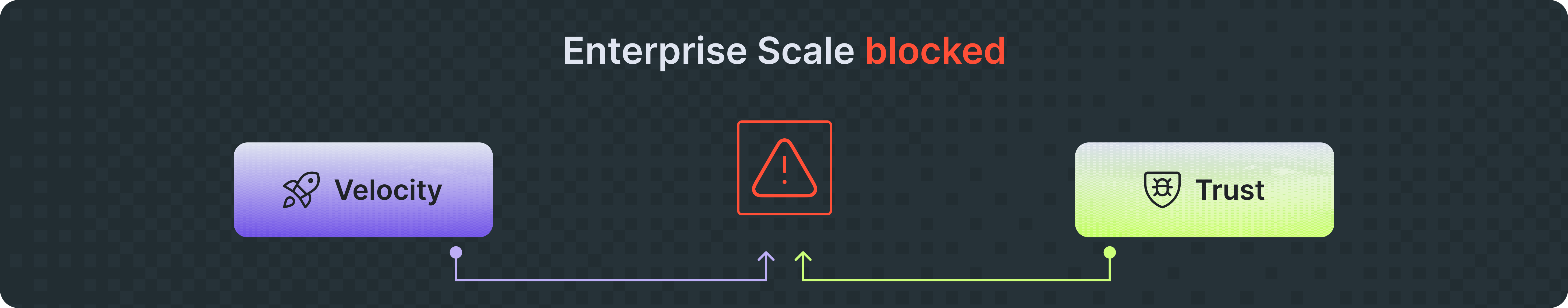

In enterprise environments, trust is not optional. It determines whether AI-generated code ever ships.

The issue isn’t the majority of code that looks good. It’s the hidden edge case, assumption, or subtle behavior change that can break production systems. At scale, that level of uncertainty is unacceptable.

Once teams recognize that risk, everything slows down. Reviews get heavier, changes take longer, and trust in the codebase erodes. Over time, AI-generated code gets pushed back to experiments and greenfield projects, where technical debt quietly accumulates.

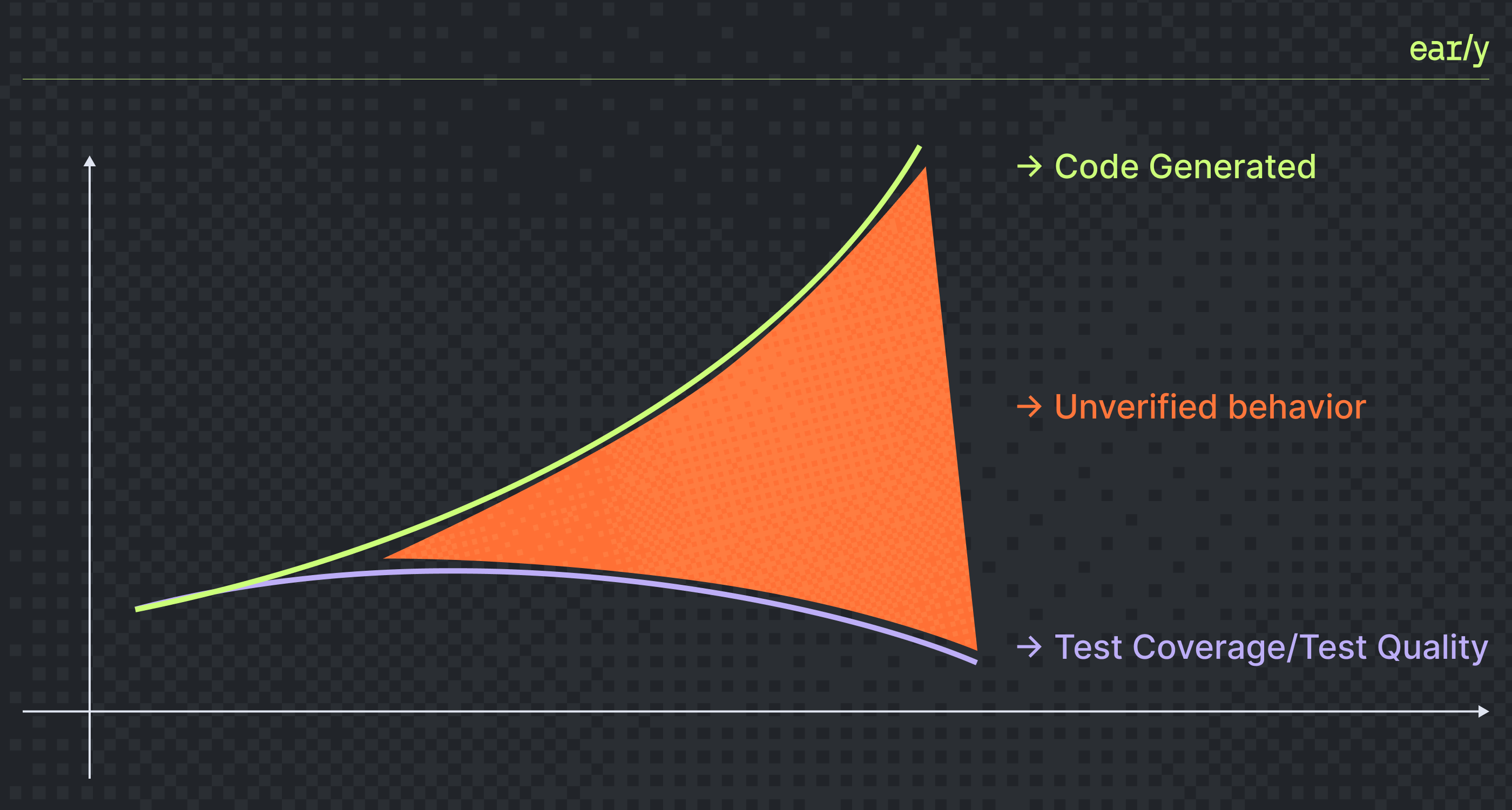

As AI adoption grows, code generation scales instantly. Testing does not.

AI-generated code introduces new behavior faster than most test strategies can adapt. Existing tests validate historical behavior, while generated tests often lack the context to meaningfully assert correctness.

The result is a growing gap between how fast code changes and how well it’s protected, even while builds stay green. As complexity increases, the most critical logic is often the least well-tested.
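To make the point about generated tests concrete, here is a minimal sketch. The shipping rule, the constants, and every function name are hypothetical, invented only for illustration. It shows how a test derived from the code alone can pin a bug in place, while a test derived from the intended rule exposes it.

```python
FREE_SHIPPING_THRESHOLD = 50.0  # hypothetical business rule: free at 50.00 or more
FLAT_FEE = 4.99                 # hypothetical flat shipping fee


def shipping_fee(order_total: float) -> float:
    """Generated implementation; note '>' where the rule calls for '>='."""
    return 0.0 if order_total > FREE_SHIPPING_THRESHOLD else FLAT_FEE


def generated_test_passes() -> bool:
    """A test derived from the code alone: it pins whatever the code returns today."""
    return shipping_fee(50.0) == 4.99


def intent_test_passes() -> bool:
    """A test derived from the rule: an order of exactly 50.00 ships free."""
    return shipping_fee(50.0) == 0.0


if __name__ == "__main__":
    print("generated test passes:", generated_test_passes())  # True
    print("intent test passes:", intent_test_passes())        # False
```

Both tests run the same line and both contain an assertion. Only the one written with knowledge of the rule can tell that the boundary is wrong, and the build stays green without it.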

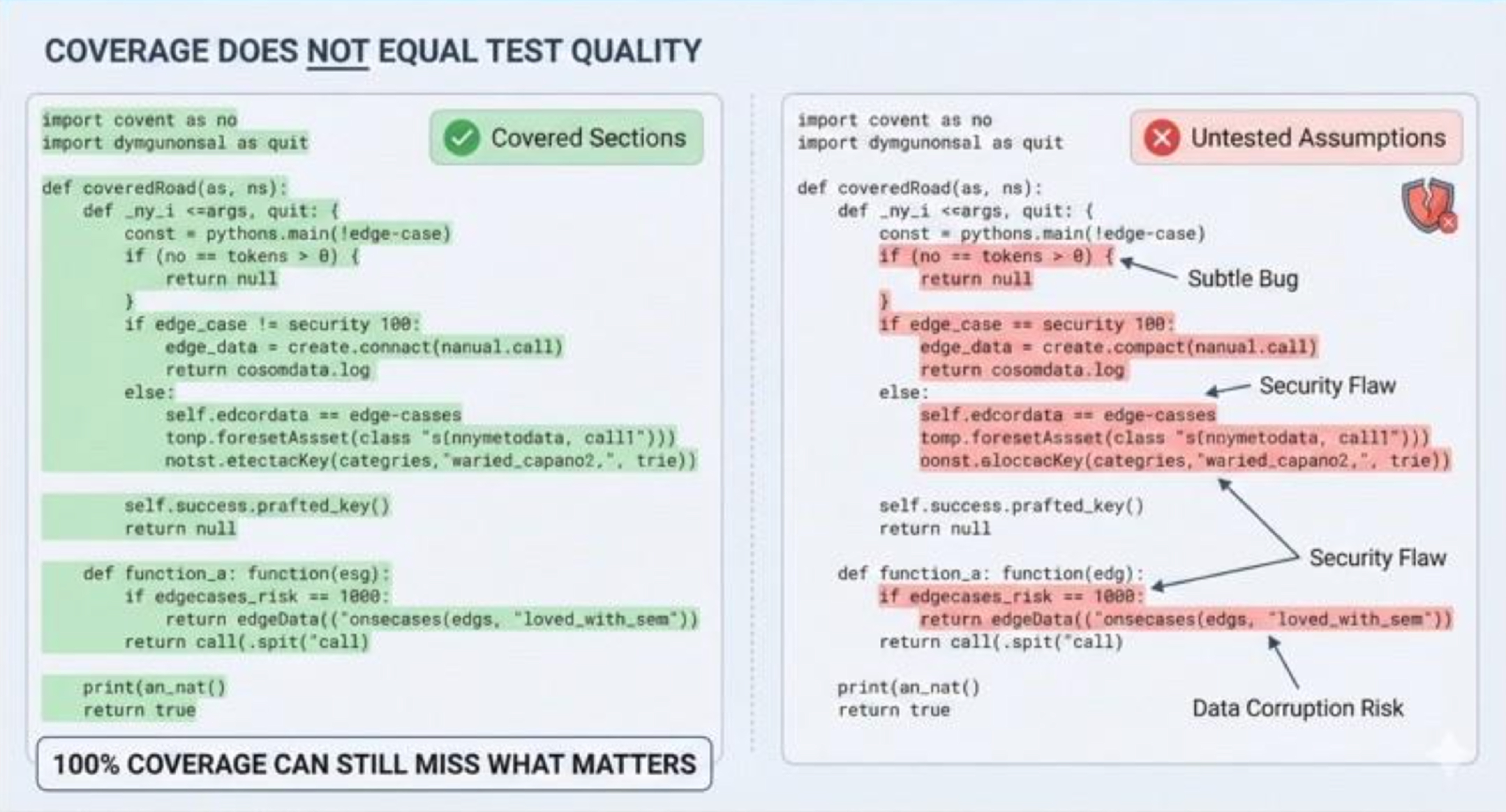

When risk increases, teams often look to coverage for reassurance.

Coverage shows what ran, not whether behavior, assumptions, or edge cases were validated. High coverage does not guarantee trust.

With AI-generated code, large amounts of new logic can be added while coverage barely moves. Builds stay green, metrics look healthy, and risk accumulates quietly underneath.
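A small sketch of why coverage alone can mislead. The discount function and both checks are hypothetical, written only to illustrate the gap. The first check executes every line and branch of the function without asserting anything, so a coverage tool would report the function as fully covered. The second asserts behavior and surfaces an unhandled edge case.

```python
def apply_discount(price: float, percent: float) -> float:
    """Return the price after a percentage discount (hypothetical example)."""
    if percent > 100:
        percent = 100  # clamp oversized discounts
    # Assumption that is never checked: percent is not negative.
    return price - price * percent / 100


def coverage_only_check() -> None:
    """Runs both branches, so line and branch coverage are complete.
    Nothing is asserted, so any returned value would pass."""
    apply_discount(50.0, 10)
    apply_discount(50.0, 150)


def behavior_check() -> list[str]:
    """Asserts outcomes and returns a description of each violated expectation."""
    problems = []
    if apply_discount(50.0, 10) != 45.0:
        problems.append("10% off 50.00 should be 45.00")
    if apply_discount(50.0, 150) != 0.0:
        problems.append("discounts above 100% should clamp to a price of 0.00")
    if apply_discount(50.0, -20) > 50.0:
        problems.append("a negative discount must not raise the price")
    return problems


if __name__ == "__main__":
    coverage_only_check()    # completes silently
    print(behavior_check())  # lists the negative-discount problem
```

The coverage number is identical whether or not the negative-discount case is handled. Only the assertions distinguish the two.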


For enterprises, the real cost doesn’t appear at merge time.

Over time, hidden complexity makes systems harder to understand and more difficult to change. New teams inherit behavior they didn’t design and tests that don’t clearly explain intent.

As trust drops, even small changes feel risky. Velocity slows, not because teams can’t move fast, but because they’re unsure what else might break.

Vibe coding unlocked speed and creativity. That mattered.

But enterprises don’t run on speed alone. They depend on trust, predictability, and the ability to change systems safely over time.

The next phase of AI-assisted development isn’t about generating more code.

It’s about making trust scale alongside it.

Test generation agents don’t replace engineering judgment.

They make it scalable, exactly where change is risky and confidence matters most.