Writing code is time-consuming, but manually writing unit tests for every function is even more exhausting.

Developers often spend about 25% of their time on testing activities, including writing and maintaining unit tests. We spend hours writing code, then even more time writing unit tests to catch potential issues before they reach production. You can't skip this step: without proper unit test coverage, small mistakes can turn into major failures. So, what do you do?

You need a smarter approach: one that improves test coverage without draining your time and energy. Here are 7 practical and effective ways to improve unit test coverage while keeping your code reliable and maintainable.

What is unit test coverage?

Unit test coverage measures how much of your code is executed when your tests run. It helps you identify untested parts of your application so you can improve code quality and maintainability.

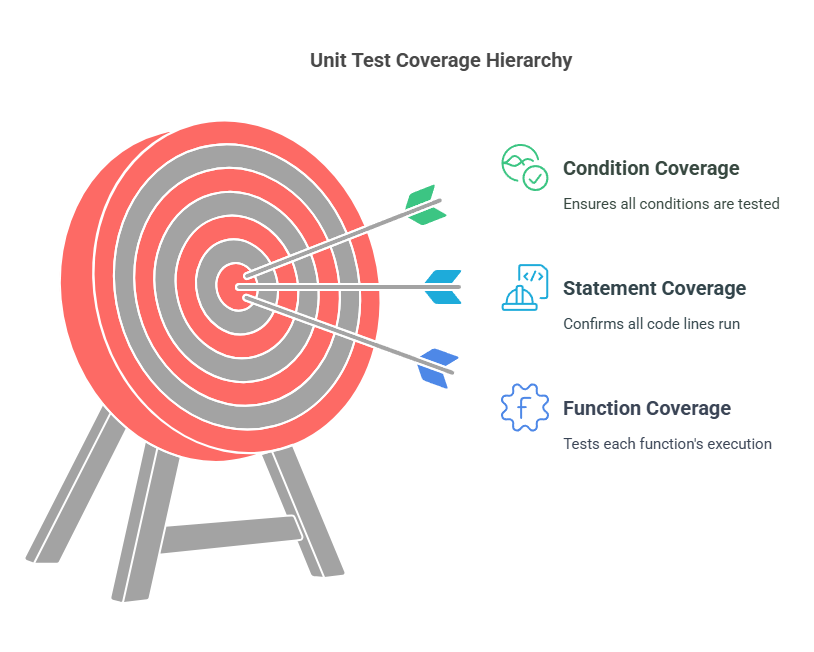

To write meaningful tests, understand the differences between function, statement, and condition coverage.

- Function coverage: Checks whether each function is called at least once.

- Statement coverage: Checks whether every statement executes at least once.

- Condition coverage: Verifies that every boolean sub-expression in a condition has evaluated to both true and false.
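Here is a small Python illustration of how the three levels differ. The `free_shipping` rule and its input values are hypothetical. One call is enough for function coverage, and one call per branch gives statement coverage, but condition coverage also needs a case where `is_member` is true while `total >= 50` is false.

```python
def free_shipping(is_member: bool, total: float) -> bool:
    # Hypothetical rule: members ship free on orders of 50 or more
    if is_member and total >= 50:
        return True
    return False


# Function coverage: a single call exercises the function
function_cases = [(True, 60)]

# Statement coverage: one case per branch executes every line
statement_cases = [(True, 60), (False, 60)]

# Condition coverage: each sub-condition must be seen both true and false.
# Neither earlier list ever makes `total >= 50` false ((False, 60) short-circuits
# before it is evaluated), so (True, 10) is added.
condition_cases = [(True, 60), (True, 10), (False, 60)]

expected = {(True, 60): True, (True, 10): False, (False, 60): False}
for is_member, total in condition_cases:
    assert free_shipping(is_member, total) == expected[(is_member, total)]
```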

Why unit test coverage matters

Unit test coverage matters because it helps prevent hidden bugs, reduces unexpected failures, makes your code easier to maintain, and improves software stability and reliability. When your tests cover the essentials, you spend less time fixing broken features and tracing where a particular issue might be coming from. Choosing the right unit testing tools makes that process far more efficient and sustainable.

Code also becomes easier to change: you can add new features and ship new code without fear of breaking something or slowing down development.

Code coverage vs adequate coverage

Identifying how much of your code is tested and measuring it as a percentage is useful, but how effective are those tests?

Take an e-commerce checkout system, for example. Your tests might reach 90% code coverage by verifying a successful purchase with a valid credit card. The coverage looks great on paper, but does it mean the system is well-tested? Not really.

How does the system handle an expired card or insufficient funds? What if the payment gateway times out? What if customers get overcharged when using a particular payment method?

Adequate coverage means testing the right things before they break: making sure critical edge cases like these are handled before code moves to production.
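A minimal sketch of what adequate coverage could look like for the checkout example, written as plain Python tests with the standard library's `unittest.mock`. `Card`, `process_payment`, `InsufficientFunds`, the gateway's `charge` method, and the status strings are all hypothetical stand-ins, not a real payment API.

```python
from dataclasses import dataclass
from datetime import date
from unittest import mock


class InsufficientFunds(Exception):
    """Hypothetical decline raised by the payment gateway."""


@dataclass
class Card:
    number: str
    expires: date


def process_payment(card, amount, gateway, today):
    # Hypothetical checkout step: validate locally, then charge via the gateway
    if card.expires < today:
        return "card_expired"
    try:
        gateway.charge(card.number, amount)
    except InsufficientFunds:
        return "insufficient_funds"
    except TimeoutError:
        return "gateway_timeout"
    return "ok"


TODAY = date(2025, 1, 15)
VALID = Card("4111111111111111", date(2026, 12, 31))


def test_valid_card_is_charged_exact_amount():
    gateway = mock.Mock()
    assert process_payment(VALID, 100.0, gateway, TODAY) == "ok"
    # Guards against overcharging: the gateway sees exactly the order amount
    gateway.charge.assert_called_once_with(VALID.number, 100.0)


def test_expired_card_is_never_charged():
    gateway = mock.Mock()
    expired = Card(VALID.number, date(2024, 12, 31))
    assert process_payment(expired, 100.0, gateway, TODAY) == "card_expired"
    gateway.charge.assert_not_called()


def test_insufficient_funds():
    gateway = mock.Mock()
    gateway.charge.side_effect = InsufficientFunds()
    assert process_payment(VALID, 100.0, gateway, TODAY) == "insufficient_funds"


def test_gateway_timeout():
    gateway = mock.Mock()
    gateway.charge.side_effect = TimeoutError("gateway did not respond")
    assert process_payment(VALID, 100.0, gateway, TODAY) == "gateway_timeout"
```

Only the first test covers the happy path; the other three are the cases a 90% "valid card" suite would miss.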

The cost of poor coverage

Organizations either adopt an effective testing strategy up front or pay the consequences when things go wrong.

You might have a payment system that calculates customer discounts. The code works fine during testing, but one untested unit somewhere can lead to a significant issue. How? A first-time buyer finds a way to apply multiple discount codes, causing the final price to drop to zero.

Now this customer is getting products for free. They share the trick with their friends, who share it with theirs, and before long the business is losing money to a network of customers exploiting the glitch while engineers scramble to fix it.
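A test that pins down the business rule would have caught this before release. The sketch below assumes a hypothetical `apply_discounts` function, fixed-amount codes, and a one-code-per-order rule; your actual rules may differ.

```python
# Hypothetical fixed-amount discount codes
DISCOUNTS = {"WELCOME20": 20.0, "SPRING30": 30.0, "VIP50": 50.0}


def apply_discounts(price, codes):
    # Assumed business rule: at most one discount code per order
    if len(codes) > 1:
        raise ValueError("only one discount code per order")
    if codes:
        price -= DISCOUNTS[codes[0]]
    # Never let the total go below zero
    return max(price, 0.0)


def test_single_code_applies():
    assert apply_discounts(80.0, ["WELCOME20"]) == 60.0


def test_stacked_codes_are_rejected():
    # The exploit from the story: stacking codes until the price hits zero
    try:
        apply_discounts(80.0, ["WELCOME20", "SPRING30", "VIP50"])
    except ValueError:
        return
    raise AssertionError("stacked discount codes were accepted")


def test_total_never_goes_negative():
    assert apply_discounts(40.0, ["VIP50"]) == 0.0
```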

AI-powered testing can bridge the gaps

Unit testing is essential, but it's also a massive time sink. Developers can't afford to waste hours manually writing and updating tests, especially with tight sprint cycles and a growing backlog of technical debt.

This is where AI-powered testing tools come in. With agentic AI systems like EarlyAI, unit tests can be generated in seconds, not hours. These tools don’t just automate the boilerplate—they intelligently identify what to test, adapt to your code structure, and fill in the gaps that human-written tests often miss. The result is faster test coverage, fewer blind spots, and less time wasted on test maintenance.

7 methods to improve unit test coverage

Whether you're testing manually or using AI to accelerate the process, improving test coverage still requires an intentional strategy.

Here are seven practical methods to help you build smarter, more effective test suites that actually catch issues before they hit production.

1. Audit your current unit test coverage

Start by asking and answering two questions: what do we currently have, and what works for us? Before adopting a new approach, understand where you stand and identify the gaps using coverage reports from tools like coverage.py (Python), Istanbul/nyc (JavaScript), or Jest's built-in coverage reporting.

Don’t just look at overall coverage percentages; dig deeper. Are critical functions tested? Are all branches of decision-making logic covered? A high percentage might be misleading if tests focus on easy paths while ignoring complex logic.
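To see how a healthy-looking percentage can hide an untested edge case, here is a toy line-coverage measurement built on Python's standard `sys.settrace` and `dis` modules. It is only an illustration; real tools such as coverage.py measure far more robustly and can also report branch coverage. `checkout_total` is hypothetical.

```python
import dis
import sys


def checkout_total(subtotal, coupon):
    if coupon == "SAVE10":        # line offset 1
        subtotal -= 10            # line offset 2
    if subtotal < 0:              # line offset 3
        subtotal = 0              # line offset 4: clamp a negative total
    return subtotal               # line offset 5


def body_lines(func):
    """Offsets (from the def line) of every line that holds code in func."""
    first = func.__code__.co_firstlineno
    return {
        line - first
        for _, line in dis.findlinestarts(func.__code__)
        if line is not None and line > first
    }


def executed_lines(func, *args):
    """Call func(*args) and record which of its lines actually ran."""
    code = func.__code__
    first = code.co_firstlineno
    hit = set()

    def tracer(frame, event, arg):
        if frame.f_code is not code:
            return None              # don't trace any other function
        if event == "line":
            hit.add(frame.f_lineno - first)
        return tracer                # keep receiving line events for func

    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(None)
    return hit


def line_coverage(func, *args):
    """Return (percent of lines executed, sorted list of missed offsets)."""
    total = body_lines(func)
    hit = executed_lines(func, *args) & total
    return 100 * len(hit) / len(total), sorted(total - hit)


# A happy-path "test": a valid coupon on an ordinary order
percent, missed = line_coverage(checkout_total, 50, "SAVE10")
print(f"{percent:.0f}% covered, missed line offsets: {missed}")
```

Running it prints `80% covered, missed line offsets: [4]`: the happy-path call skips only the line that clamps a negative total, which is exactly the edge case most likely to matter.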

2. Prioritize high-risk code paths

The truth is that not all code needs the same level of testing. Focus on high-risk or critical areas: payment processing, data validation, access controls (which ensure users can reach only the features they are authorized to use), and anything else that would cause significant issues if it broke.

For example, what happens if an attacker repeatedly tries different usernames and passwords on your application? Can the system automatically lock the account for some time and carry out security checks later?
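One way to make lockout behavior testable is to inject the clock, so a test can fast-forward time instead of sleeping. The `LoginGuard` class, its limits, and its method names are hypothetical.

```python
import time


class LoginGuard:
    """Hypothetical brute-force guard: lock an account after repeated failures."""

    def __init__(self, max_attempts=5, lockout_seconds=900, clock=time.monotonic):
        self.max_attempts = max_attempts
        self.lockout_seconds = lockout_seconds
        self.clock = clock          # injectable so tests can control time
        self.failures = {}          # username -> consecutive failed attempts
        self.locked_until = {}      # username -> time when the lock expires

    def is_locked(self, username):
        return self.clock() < self.locked_until.get(username, float("-inf"))

    def record_failure(self, username):
        count = self.failures.get(username, 0) + 1
        self.failures[username] = count
        if count >= self.max_attempts:
            self.locked_until[username] = self.clock() + self.lockout_seconds
            self.failures[username] = 0

    def record_success(self, username):
        self.failures.pop(username, None)


def test_account_locks_after_repeated_failures():
    now = [1000.0]                                  # fake, controllable clock
    guard = LoginGuard(max_attempts=3, lockout_seconds=60, clock=lambda: now[0])
    for _ in range(2):
        guard.record_failure("alice")
    assert not guard.is_locked("alice")             # still below the limit
    guard.record_failure("alice")
    assert guard.is_locked("alice")                 # third failure locks it
    assert not guard.is_locked("bob")               # other users unaffected
    now[0] += 61
    assert not guard.is_locked("alice")             # lock expires after 60 s
```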

3. Cover edge cases and boundary conditions

A function may work fine for typical values but fail when given an empty string, a null value, or an extremely large number.

Take a login system that requires an email and a password. What happens if the email field is empty? What if the password has only one character? Testing these edge cases helps prevent failures in production.

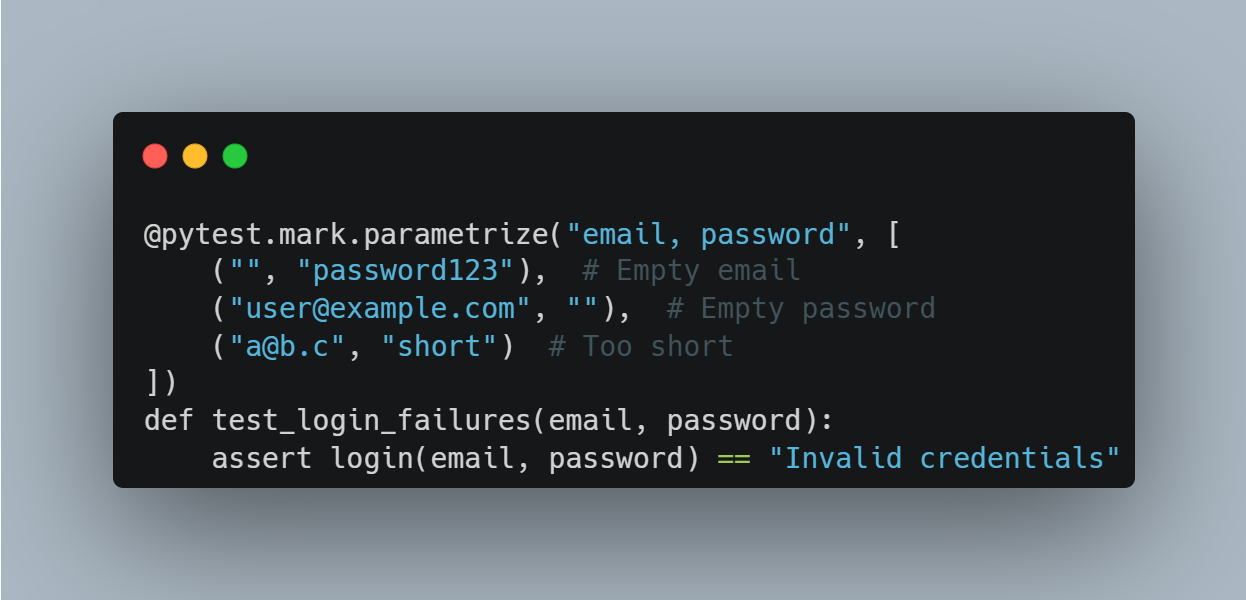

Using data-driven testing can help automate this. For instance, in Python with Pytest:

Data-driven test using Pytest to validate login failures across multiple edge cases.
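A minimal sketch of such a test, assuming a hypothetical `login(email, password)` function backed by an in-memory user store (a real system would compare password hashes, not plain text):

```python
import pytest

# Hypothetical user store; plain text only to keep the sketch short
USERS = {"user@example.com": "correct-horse-battery"}


def login(email, password):
    # Stand-in for the real login system under test
    if not email or not password or USERS.get(email) != password:
        return "Invalid credentials"
    return "Welcome"


@pytest.mark.parametrize(
    "email,password",
    [
        ("", "correct-horse-battery"),      # missing email
        ("user@example.com", ""),           # empty password
        ("user@example.com", "a"),          # one-character password
        ("not-an-email", "correct-horse"),  # unknown / malformed email
    ],
)
def test_invalid_login_is_rejected(email, password):
    assert login(email, password) == "Invalid credentials"


def test_valid_login_succeeds():
    # Positive control: make sure the happy path still works
    assert login("user@example.com", "correct-horse-battery") == "Welcome"
```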

This test checks if the login system can block incorrect logins. It tries logging in with different bad inputs, like a missing email, an empty password, or a very short password.

Each time these bad inputs are tried, the system is expected to say "Invalid credentials." If bad logins go through, the test fails, meaning the system isn’t correctly checking login details.

4. Leverage parameterized testing for broader coverage

Parameterized tests let you run the same test function against many different inputs, instead of writing a separate test case for each one. Some AI test automation tools can even generate these variations automatically, helping you scale test coverage without bloating your test files.

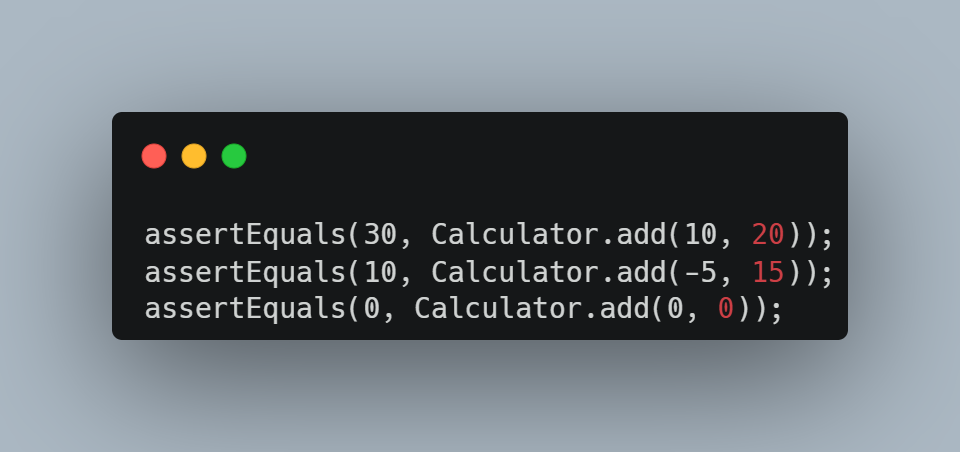

For example, if you're testing a calculator's addition function, instead of writing multiple individual test cases like:

Inefficient approach: multiple hardcoded test cases for the same function.

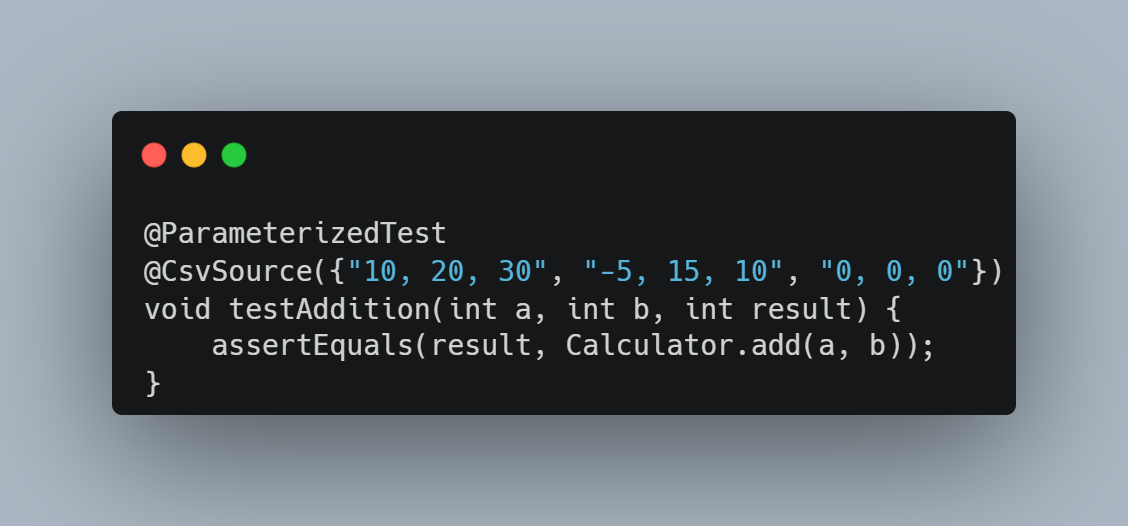

You can use parameterized testing to define a single test that runs with different values, like this:

Clean approach: parameterized test function using multiple input values.
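A sketch of the parameterized version using pytest's `@pytest.mark.parametrize`, assuming a hypothetical `add` function; the extra cases (negatives, zero, floats) show how cheaply new inputs can be added:

```python
import pytest


def add(a, b):
    # Hypothetical calculator function under test
    return a + b


@pytest.mark.parametrize(
    "a,b,expected",
    [
        (2, 3, 5),
        (-1, 1, 0),
        (0, 0, 0),
        (-4, -6, -10),
        (0.1, 0.2, pytest.approx(0.3)),  # floats need an approximate comparison
    ],
)
def test_add(a, b, expected):
    assert add(a, b) == expected
```

pytest reports each tuple as its own test case, so a failing input is identified by its parameters rather than hidden inside one large test.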

This ensures the function is tested with multiple cases efficiently, improving test coverage while keeping the code cleaner.

5. Use mocking and stubbing correctly

Unit tests should be fast, reliable, and independent. However, if your code depends on external systems like databases or APIs, tests can become slow, or fail for reasons unrelated to your code whenever those services are down.

With mocking, you can create a ‘fake’ version of these services that returns results instantly.

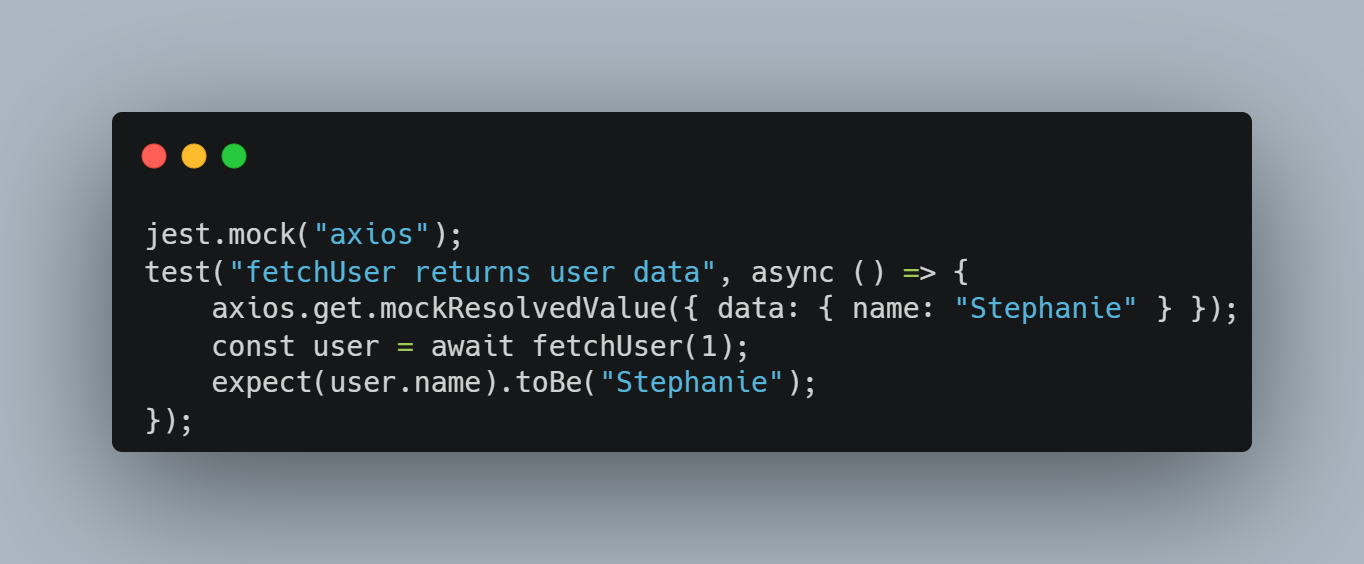

For example, instead of calling a real API to fetch user data, you can mock the response to return a fake user like "Stephanie." This allows you to test if your function handles the response correctly without calling the API.

Mocked API response in Jest to simulate user data without external dependencies.

This test mocks an API call using Jest and axios: the mocked call returns a fake user named Stephanie, and the test checks that the fetched user's name is Stephanie.
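For comparison, the same idea in Python uses `unittest.mock.patch` from the standard library instead of Jest. `fetch_user` and the api.example.com URL are hypothetical; the patch replaces `urllib.request.urlopen` only inside the `with` block, so no network request is made.

```python
import json
import urllib.request
from unittest import mock

API_URL = "https://api.example.com/users/{}"  # hypothetical endpoint


def fetch_user(user_id):
    # Code under test: performs a real HTTP request when not mocked
    with urllib.request.urlopen(API_URL.format(user_id)) as resp:
        return json.loads(resp.read())


def test_fetch_user_with_mocked_api():
    # Fake response object that behaves like the one urlopen returns
    fake_resp = mock.MagicMock()
    fake_resp.read.return_value = json.dumps({"id": 1, "name": "Stephanie"}).encode()
    fake_resp.__enter__.return_value = fake_resp  # support the "with" statement

    # Replace urlopen for the duration of the test
    with mock.patch("urllib.request.urlopen", return_value=fake_resp) as fake_urlopen:
        user = fetch_user(1)

    assert user["name"] == "Stephanie"
    fake_urlopen.assert_called_once_with("https://api.example.com/users/1")
```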

6. Enforce coverage in CI/CD pipelines

To keep test coverage consistent, integrate it into your CI/CD pipeline to automatically check every code change.

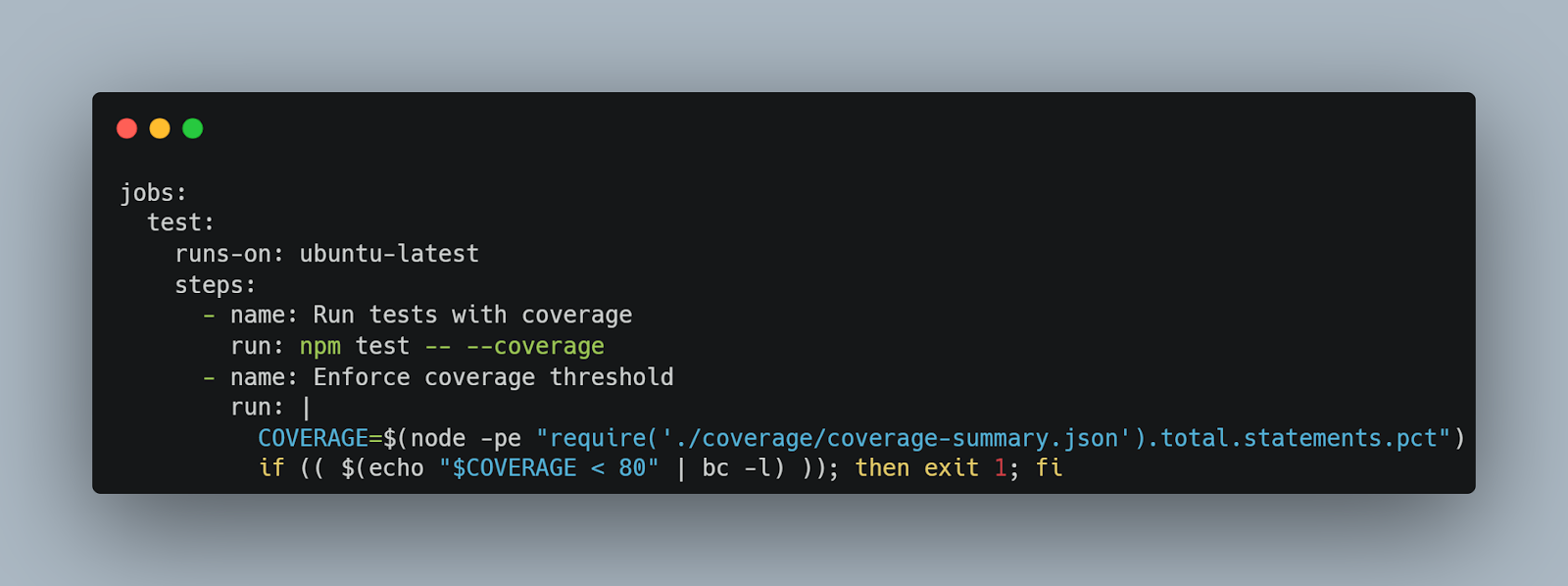

Set a minimum coverage threshold (e.g., 80%) so that the build fails, and the code cannot be merged, whenever coverage drops below that level. For example:

GitHub Actions workflow enforcing minimum test coverage threshold before merging.

This GitHub Actions workflow runs tests with coverage and fails the build if the test coverage is below 80%.
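The check itself is simple. As a sketch, assuming the tests ran under coverage.py and its `coverage json` command wrote a report whose `totals` section includes `percent_covered`, a CI step could apply the gate like this (`coverage_gate` and `main` are hypothetical helpers):

```python
import json


def coverage_gate(report: dict, threshold: float = 80.0) -> int:
    """Return an exit code: 0 if coverage meets the threshold, 1 otherwise."""
    percent = report["totals"]["percent_covered"]
    if percent < threshold:
        print(f"FAIL: coverage {percent:.1f}% is below the {threshold:.0f}% minimum")
        return 1
    print(f"OK: coverage {percent:.1f}%")
    return 0


def main(path: str = "coverage.json", threshold: float = 80.0) -> int:
    # Load the report written by `coverage json` and apply the gate
    with open(path) as f:
        return coverage_gate(json.load(f), threshold)
```

A CI step would call `sys.exit(main())` after writing the report, so a non-zero exit code fails the job. coverage.py can also enforce this directly with `coverage report --fail-under=80`, and pytest-cov offers `--cov-fail-under=80`.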


7. Automate test writing with AI

Manual test writing doesn’t scale—especially across large, fast-changing codebases. Agentic AI changes the game by enabling tools to reason through logic, decide what needs testing, and adapt as your code evolves.

EarlyAI is a Visual Studio Code extension that brings this to life. It generates and maintains validated unit tests for JavaScript, TypeScript, and Python—automatically. Unlike traditional code helpers, it behaves like an autonomous agent: identifying coverage gaps, updating tests as your logic shifts, and surfacing potential bugs before they reach production.

It generates both green tests (to lock in correct behavior) and red tests (to expose potential bugs), letting you offload test maintenance without sacrificing quality.

More intelligent testing, not just more testing

Manually writing tests is old-school and tedious. Developers should spend more time building than writing tests or debugging failures. Improving unit test coverage isn’t about chasing 100%. It’s about writing meaningful tests that catch bugs, reduce debugging time, and enhance software reliability.

Tests are necessary, but who says you have to write them all yourself? The methods above help you build a test suite that catches real issues before they reach production.

Adopting AI-powered tools like EarlyAI lets you achieve adequate test coverage without wasting time on vanity metrics. AI isn't here to replace your expertise; it's here to save you time, money, and effort. With agentic AI, you're not just accelerating tasks: you're offloading cognitive load to systems that understand, plan, and execute with minimal input.

Start by implementing one or two of these methods today, and if you need help writing unit tests, let EarlyAI do the work for you.