How AI Agents Enable Faster, More Complex Software Development

Imagine this: It’s late at night, and after hours of development, you’re about to push a major update live. But a nagging thought lingers: have you really caught every potential bug? Are you creating the next CrowdStrike crisis? For most developers, this uncertainty is part of the job. But what if it doesn’t have to be?

AI assistants for test code generation have become essential for such moments, helping developers comb through code and identify bugs. They’re reliable helpers, but they’re also limited. They offer basic support and follow instructions, but never go beyond what they were specifically prompted to do.

But the next generation of AI is different. It doesn’t wait for specific instructions; it acts independently. Welcome the AI agent for test code generation: a technology that tests your code at a speed and quality beyond your reach, and safeguards your code without you having to ask. An AI agent transforms your late-night worry into confidence by proactively protecting your code from bugs and errors.

As the founder of Early, I believe in empowering developers to focus on building applications. With an AI agent handling the complexities of unit tests, developers can deliver higher-quality code without losing sleep over bugs slipping through.

Setting the Stage: AI Assistants and AI Agents Explained

AI assistants are digital tools powered by artificial intelligence designed to help users perform tasks more efficiently. They leverage machine learning and natural language processing to understand context and deliver relevant responses or actions, often based on patterns learned from vast amounts of data.

For example, AI assistants can help with writing by suggesting phrases, summarizing content, or rephrasing sentences, similar to how they help developers with code suggestions. They can also support productivity by automating routine tasks, scheduling, and even answering questions or conducting research.

An AI agent, on the other hand, is an autonomous software program that can perceive its environment, make decisions, and take actions to achieve specific goals or complete tasks. Unlike AI assistants, which often require ongoing user prompts or inputs, AI agents operate with a higher degree of independence. They continuously gather information from their surroundings, process it, and act based on pre-defined objectives, adapting their behavior over time.

Examples of AI agents include customer support chatbots that handle inquiries without hands-on human intervention, next-level recommendation engines that suggest relevant content or products based on user behavior, and agent-powered robotics that navigate and interact within physical spaces. More advanced agents are even capable of coordinating with other AI agents to achieve more complex goals collaboratively.

The key distinction of an AI agent is its autonomous functioning and ability to operate proactively toward goals. While autonomous, AI agents can also adapt based on directional feedback from their environment, producing results that more accurately match the operator’s intent.

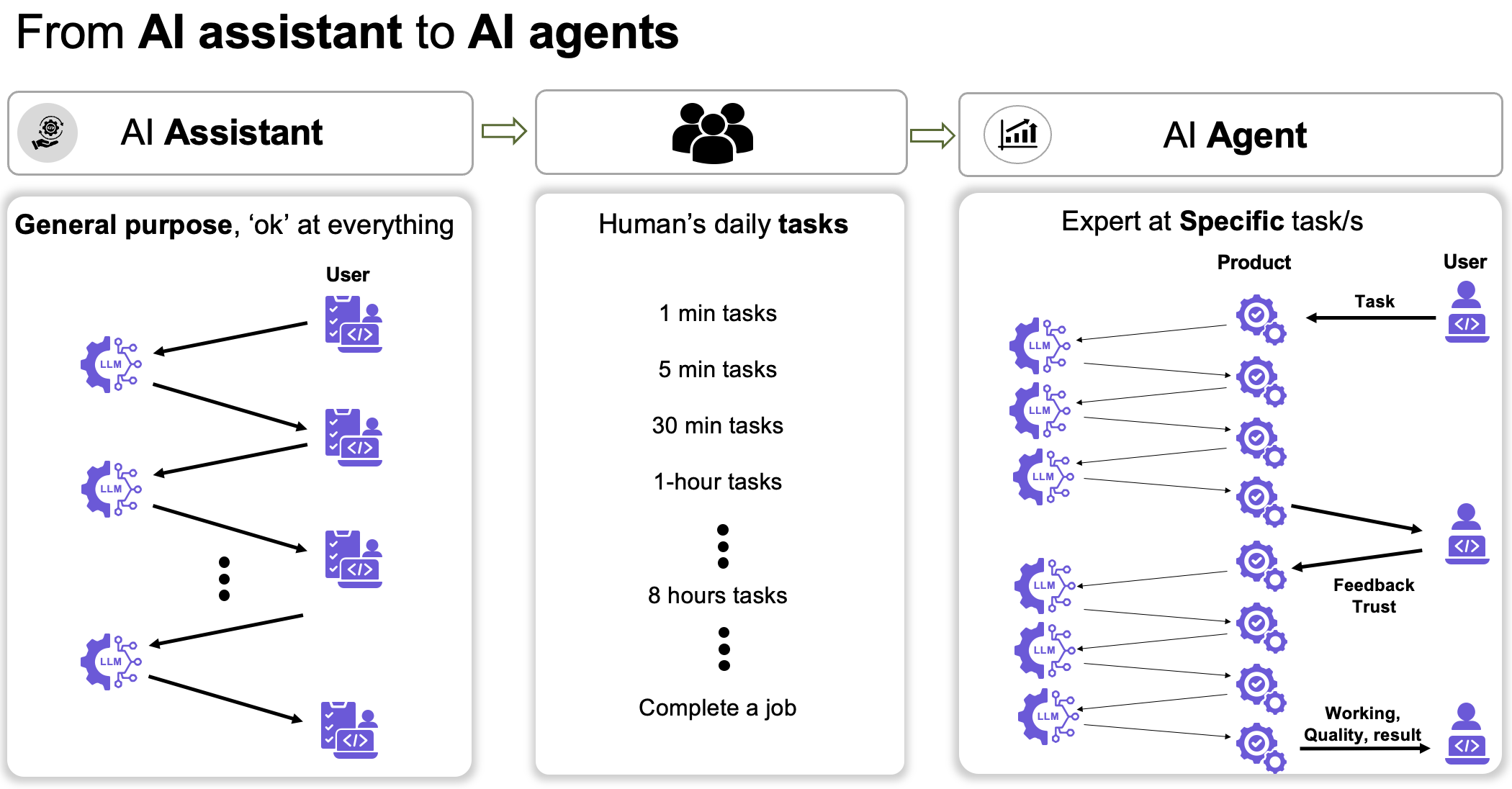

In the image above, you can see that, in the case of an AI agent, the user assigns a task to a system or product, which then interacts with large language models (LLMs) and other systems and data sources multiple times until it reaches a complete outcome.
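The loop described here can be sketched in a few lines of Python. This is only a minimal illustration, not how any particular product works: `run_agent`, `fake_model`, and `fake_check` are hypothetical names, and the "model" is a hard-coded stand-in so the sketch runs without any real LLM.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class AgentRun:
    """Record of one agent run: every draft produced and whether the goal was met."""
    attempts: List[str] = field(default_factory=list)
    succeeded: bool = False


def run_agent(task: str,
              generate: Callable[[str, str], str],
              check: Callable[[str], str],
              max_rounds: int = 5) -> AgentRun:
    """Ask the model, check the result, feed errors back, repeat.

    `generate(task, feedback)` stands in for an LLM call and `check(draft)`
    for evaluating the draft (for example, running a test file). `check`
    returns an empty string on success or an error message on failure.
    """
    run = AgentRun()
    feedback = ""
    for _ in range(max_rounds):
        draft = generate(task, feedback)
        run.attempts.append(draft)
        feedback = check(draft)
        if not feedback:  # no error reported: the outcome is complete
            run.succeeded = True
            break
    return run


# Hypothetical stand-ins (assumptions, not a real model or test runner).
def fake_model(task: str, feedback: str) -> str:
    # The first draft forgets the assertion; the second reacts to the feedback.
    if "missing assert" in feedback:
        return "def test_add(): assert add(1, 2) == 3"
    return "def test_add(): add(1, 2)"


def fake_check(draft: str) -> str:
    return "" if "assert" in draft else "missing assert"


result = run_agent("write a unit test for add()", fake_model, fake_check)
print(len(result.attempts), result.succeeded)  # prints: 2 True
```

The user supplies only the task; the retries and the feedback between rounds happen inside the loop, which is the practical difference from an assistant that waits for the next prompt.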

AI Assistant and AI Agents for Test Code Generation

When looking at GenAI DevTools for test code generation and deciding how to implement and use them, it’s important to determine whether you need an AI assistant or an AI agent. AI assistants provide test code recommendations based on developer prompts. Interactive feedback and iterations then allow the developer to refine the output gradually.

An AI assistant’s test code output is often generic and requires significant manual adjustments until the code is usable. This approach is helpful for small, low-stakes tasks but can be cumbersome for larger, more intricate projects. This “nice-to-have” approach isn’t reliable or robust enough to prevent a CrowdStrike-scale incident, nor for any organization that relies on GenAI as its testing foundation or as a dependable replacement for the time developers spend on code quality tasks (about a third of their time).

AI agents for test code generation are a whole new ball game. Once a developer assigns the agent a task, the AI agent interacts with the underlying model to analyze data, and autonomously produces workable test code.

The result is not just reliable, executable test code; it also often exceeds human capabilities in quality, speed and scale. Agent-generated test code is comprehensive, covering not just happy paths but also edge cases and nuanced scenarios. These generated tests either immediately protect users from bugs (green, passing unit tests) or, often and even more desirably, find bugs (red, failing unit tests).
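To make the green/red distinction concrete, here is a small Python sketch. Everything in it is an assumption made for illustration: `average` is a made-up function with a deliberate bug, the three checks are the kind of cases a generated suite might contain, and `run_tests` is a toy runner rather than a real test framework.

```python
from typing import Callable, Dict


def average(values):
    """Function under test. Deliberate bug: an empty list raises an error."""
    return sum(values) / len(values)


def check_happy_path():
    assert average([2, 4, 6]) == 4


def check_single_value():
    assert average([5]) == 5


def check_empty_list_returns_zero():
    # Edge case. Assumes the intended behavior for no input is 0.
    assert average([]) == 0


def run_tests(tests: Dict[str, Callable[[], None]]) -> Dict[str, str]:
    """Run each check and label it green (passed) or red (failed or crashed)."""
    report = {}
    for name, test in tests.items():
        try:
            test()
            report[name] = "green"
        except Exception:
            report[name] = "red"
    return report


report = run_tests({
    "happy_path": check_happy_path,
    "single_value": check_single_value,
    "empty_list": check_empty_list_returns_zero,
})
print(report)
# prints: {'happy_path': 'green', 'single_value': 'green', 'empty_list': 'red'}
```

The two green checks guard behavior that already works. The red one is arguably the more valuable result, since it points at a division by zero that nobody asked the suite to look for.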

This approach makes it possible to rely on GenAI to create high-quality test code that can find bugs, without close human involvement and without explicit instructions on what to do or how to fix the test code. Developers can forgo writing tests almost altogether, freeing them up to focus on building innovative applications and bringing faster value to the business.

In the future, the AI agent approach will advance even further, to an “agent of agents” model. In this setup, a complex task is divided into smaller tasks. Simpler agents handle these smaller tasks in parallel, passing results to other agents or developers, until the task is complete. This approach yields faster results by breaking down and resolving complex problems in a collaborative network, a potential game-changer for comprehensive test coverage and continuous integration pipelines. But this is a future endeavor, so let’s revisit it in a few months…
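As a rough sketch of that “agent of agents” idea, a coordinator can split a job into subtasks, hand each one to a simpler worker, and collect the results. The names `split_task`, `worker_agent`, and `coordinate` are hypothetical, and the worker here only returns a string where a real one would call a model.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def split_task(functions: List[str]) -> List[str]:
    """Coordinator step: one subtask per function that needs tests."""
    return [f"write tests for {name}" for name in functions]


def worker_agent(subtask: str) -> str:
    """Stand-in for a simpler agent; a real one would call a model here."""
    return f"done: {subtask}"


def coordinate(functions: List[str]) -> Dict[str, str]:
    """Split the job, run the workers in parallel, and merge their results."""
    subtasks = split_task(functions)
    # pool.map() runs workers concurrently and yields results in input order.
    with ThreadPoolExecutor(max_workers=4) as pool:
        results = list(pool.map(worker_agent, subtasks))
    return dict(zip(functions, results))


summary = coordinate(["parse", "validate", "save"])
print(summary)
```

Threads are used only because the stand-in workers are trivial. If the workers made network calls to a model, the same structure would apply, with the pool size bounding how many requests run at once.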

A Look Into the Ecosystem

Let’s compare GenAI assistants and GenAI agents in test code generation, using unit tests as an example. Unit tests catch bugs when they are cheapest to fix, preventing costly downstream issues. When generated automatically, they also accelerate release cycles. Finally, they improve code coverage across various use cases during development. This means that using GenAI to create and run them has the potential to transform code quality, if done correctly.

Currently, some of the most prominent GenAI coding assistant tools in the space are GitHub Copilot and Cursor, with newer companies raising enormous sums to join the party. Indeed, they take away some of the manual work developers do when writing unit tests, driving productivity.

However, their output requires extensive review before compiling. Once test code is generated, developers need to review it manually and meticulously, going through the assistant's suggestions one at a time and deciding whether to accept each one. This high-maintenance approach is hardly seamless, scalable, or a game-changer.

On the other hand, an AI agent-driven tool like Early, which can be easily added to the IDE, generates hundreds of running tests in minutes, or thousands in less than an hour. These tests are easy to manage in bulk, provide up to 70% code coverage in under an hour for ts-morph, a popular OSS project, and include both red and green tests, with a minimal number of clicks required from developers. This is a feat that’s impossible to achieve with an assistant-based approach.

The Value of AI Agents for Developers Writing Test Code

For developers, the difference between assistant and agent is the difference between suggested code and workable test code. Let’s compare the two approaches:

1. Output: Suggestions vs. working code - Assistants work incrementally, suggesting code that requires review, while agents produce code that works or comes much closer to working.

2. Quality: Comprehensive tests - Agents generate comprehensive test cases that are ready to use. They also cover a wider range of scenarios, including edge cases, happy paths and complex mocks. By contrast, AI assistants produce medium- to low-quality test code, which requires extensive work from developers before the tests run properly.

3. Developer Productivity - AI assistants help save time by generating code automatically. However, iterations and low-quality outputs take up developer time, and can ultimately lead developers to discard the outputs, resulting in wasted time. Agents, on the other hand, actually free up developers for other tasks by automatically generating test code that works.

4. Impact - While AI assistants’ impact is incremental, one test at a time, AI agents for test code generation can produce hundreds or even thousands of unit tests in an hour, delivering high coverage at the pull request or project level.

5. Transformative Testing and Development - Testing is one of the most painful developer tasks, per Microsoft's survey “Toward Effective AI Support for Developers”. GenAI agents have the power to fundamentally transform this aspect across the engineering team, making developers’ lives much easier while also generating higher-quality test code.

Where Can GenAI Agents Bring the Most Value?

Based on our decades of experience working with developers, we’ve found four ways in which GenAI agents can enhance test generation:

1. Continuous Testing During Development - Creating quality unit tests at scale allows testing early and frequently, catching bugs at their source more easily than before.

2. Thorough PR Testing - Agents create comprehensive test code, ensuring high code coverage before a pull request is submitted.

3. Private Method Testing - Agents cover both public and private methods. This maintains encapsulation while uncovering hidden bugs across all branches, leveraging AI's thoroughness.

4. TDD Done Differently - Engineers love the idea of TDD but hate executing it. Agents simplify and streamline TDD.
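The private method testing point above can be illustrated with a short Python sketch. `PriceCalculator` is a hypothetical class, and "private" here means Python's leading-underscore convention; other languages enforce privacy differently, so treat this as an analogy rather than a description of any specific tool. Amounts are integer cents to keep the arithmetic exact.

```python
class PriceCalculator:
    """Hypothetical class used only for illustration."""

    def total(self, unit_price: int, quantity: int) -> int:
        """Public entry point: total price in cents."""
        return self._apply_bulk_discount(unit_price * quantity, quantity)

    def _apply_bulk_discount(self, amount: int, quantity: int) -> int:
        """Private helper by convention: 10% off for 10 or more items."""
        if quantity >= 10:
            return amount * 90 // 100
        return amount


calc = PriceCalculator()

# Test through the public method.
assert calc.total(500, 2) == 1000

# Test the private helper directly, on both sides of the discount threshold.
# The class is unchanged: nothing was made public just to be testable.
assert calc._apply_bulk_discount(1000, 9) == 1000
assert calc._apply_bulk_discount(1000, 10) == 900

print("all checks passed")
```

Exercising the helper directly makes the boundary between 9 and 10 items easy to pin down, something that is harder to isolate when every check has to go through `total`.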

Conclusion

Future Implications: Driving High-Quality Code Faster and More Efficiently

With AI agents, testing evolves from a complex, painful and resource-intensive process to an almost automated step in the development pipeline. For developers aiming to become “100x engineers”, using an AI agent as a “test engineer” in their development workflow is key to easily delivering high-quality, reliable software at scale. This can make the difference between a smoothly running service and a catastrophic, CrowdStrike-level failure.

About Early

Try Early yourself: installation takes less than a minute, and it’s free to start.