API Security Is Still Tested From the Outside

It’s Time to Verify Endpoint Security in Development

Executive Summary

Many API security failures aren’t caused by unknown vulnerabilities or sophisticated attacks. They stem from implicit assumptions: authorization rules and data-access boundaries that were never explicitly tested or continuously verified as systems evolved. As API surfaces expand and AI accelerates change, security approaches that rely on post-deployment discovery struggle to keep up. This article argues for treating endpoint security as a testable property of the system, validated through development-time unit and integration tests that evolve alongside new and modified APIs.

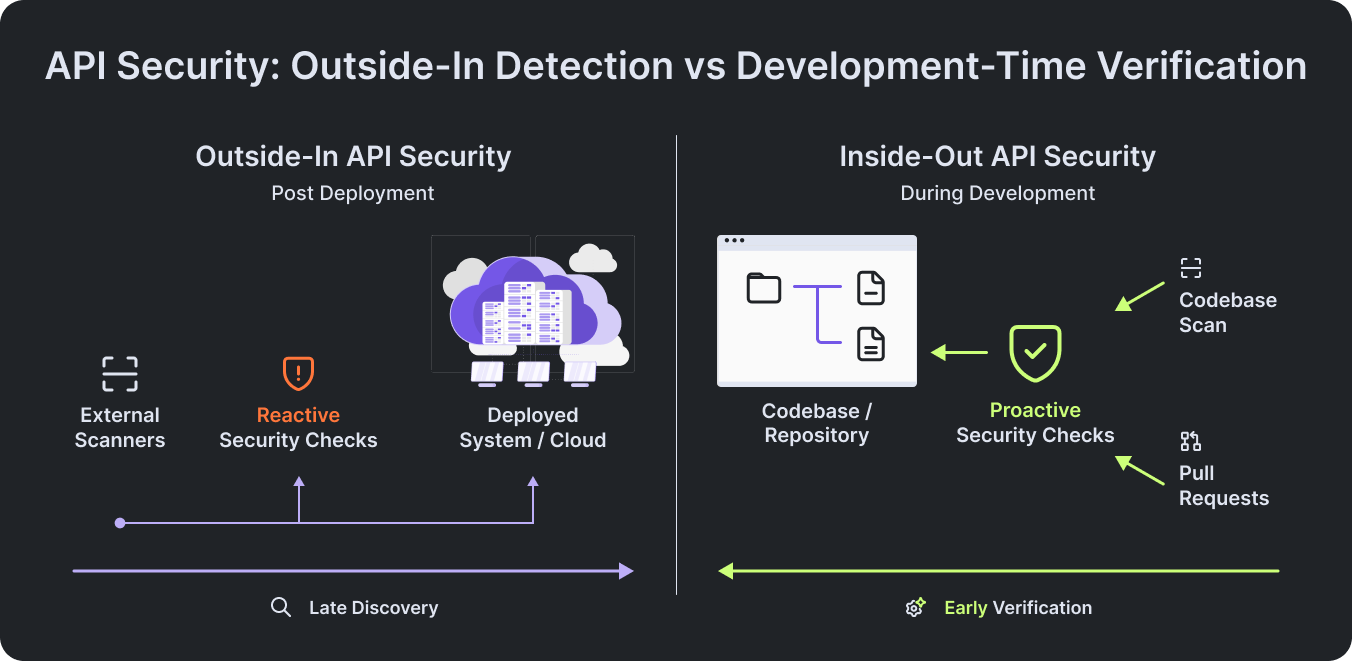

API security is still tested from the outside, after systems are deployed and behavior is already exposed. Most security tools evaluate what an attacker can do to a running system, not what the system was intended to allow. As a result, many API incidents stem from untested assumptions in code rather than broken defenses. Real protection starts by validating security intent where endpoints are defined, not after failures appear in production.

The Tea App incident, where a Firebase bucket remained publicly accessible, is just one example. No exploit was required. No clever bypass was discovered. The endpoint was open because nothing in the development process asserted that it must not be.

This pattern reflects a systemic failure across engineering, security, and process. At its core, it’s a failure of how we think about API security during development.

Most organizations test API security after the system exists. Once services are deployed and traffic flows, teams rely on penetration tests, dynamic and static scanners, or bug bounty programs to identify risk.

These practices are necessary, but they share the same limitation: they evaluate observable behavior, not developer intent. They can tell you what an attacker can do to a deployed system, but they cannot tell you what the system was supposed to allow in the first place.

By the time an issue is found, the code has already shipped, long after the pull request where the risk was introduced. The context of the change is lost, ownership is unclear, and fixes are reactive. Signals available at review time, such as PR coverage, are a more practical way to expose that risk earlier. What appears to be a security failure is often a timing failure.

Security is being validated too far downstream from where the most important decisions are made.

Outside-in API security focuses on observing exposed behavior after deployment, testing running systems to see what can be accessed or exploited. Inside-out API security starts earlier, verifying security intent directly in code, where routes, access rules, and validation logic are defined. The distinction matters because behavior only shows what a system allows today, while intent determines what it should never allow at all.

Every API is explicitly defined in the codebase. Routes, handlers, middleware, guards, and validation rules describe what the system exposes and under what conditions.

That is where access decisions are encoded. That is where assumptions are introduced. And yet, most security testing treats the codebase as opaque, waiting until those decisions surface as runtime behavior.

This disconnect made sense when systems were relatively static and releases were infrequent. It breaks down in modern development environments, where APIs evolve continuously through pull requests.

If an endpoint is introduced or modified in code, that is the moment when its security properties should be verified.

Outside-in security testing answers an important but limited question: what can I do to this system right now?

Inside-out security testing asks something more fundamental: what did we intend this system to allow, and is that intent actually enforced?

Consider a simple endpoint definition:
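As a hypothetical stand-in, the sketch below registers a handler in a small, framework-free route table. The `route` decorator, the `ROUTES` registry, and the `/users/{user_id}/export` path are illustrative names, not taken from any real framework or system.

```python
from typing import Callable, Dict, Tuple

# Hypothetical in-memory route table: (method, path) -> handler.
ROUTES: Dict[Tuple[str, str], Callable[..., dict]] = {}


def route(method: str, path: str):
    """Register a handler for a method and path (illustrative only)."""
    def register(handler):
        ROUTES[(method, path)] = handler
        return handler
    return register


@route("GET", "/users/{user_id}/export")
def export_user(user_id: str) -> dict:
    # Nothing in this definition states who is allowed to call it.
    return {"user_id": user_id, "email": f"{user_id}@example.com"}
```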

Is this endpoint public? Should it require authentication? Is it restricted to administrators? Does it rely on middleware applied elsewhere?

Without an explicit test asserting the expected security behavior, the answer is not guaranteed. It exists only as an assumption: in someone’s head, in documentation, or in a code review comment that will eventually be forgotten.

In many API incidents, nothing is technically broken. No exception is thrown. No vulnerability is triggered. The system simply does what it was written to do, even if that was never the intended outcome.

This is a difficult realization for many teams, because it challenges how security incidents are usually explained.

When an issue occurs, the instinct is to look for a bug or a missing check. Often, however, nothing is wrong with the implementation itself. The system behaves exactly as it was designed to behave.

The real problem is simpler: there was never a test that explicitly stated, “This must never be allowed.”

Authentication rules, authorization logic, input validation, and data exposure limits are security contracts. If they are not asserted in tests, they are not enforced; they are assumptions. And assumptions tend to fail silently until they surface in production.
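One way to make such a contract explicit is a test that names the forbidden case directly. The sketch below is illustrative only: `Request`, `handle_export`, and the status codes it returns are assumptions made for this example, standing in for whatever handler and request types a real service uses.

```python
import unittest
from dataclasses import dataclass
from typing import Optional


@dataclass
class Request:
    # Hypothetical request shape: the authenticated caller, if any.
    user_id: Optional[str] = None
    role: str = "anonymous"


def handle_export(request: Request, target_user_id: str) -> int:
    """Return an HTTP-style status code for an export request."""
    if request.user_id is None:
        return 401  # not authenticated
    if request.role != "admin" and request.user_id != target_user_id:
        return 403  # authenticated, but not allowed to read this user
    return 200


class ExportSecurityContract(unittest.TestCase):
    def test_anonymous_caller_is_rejected(self):
        self.assertEqual(handle_export(Request(), "alice"), 401)

    def test_user_must_never_export_another_users_data(self):
        caller = Request(user_id="bob", role="user")
        self.assertEqual(handle_export(caller, "alice"), 403)

    def test_owner_and_admin_are_allowed(self):
        self.assertEqual(handle_export(Request("alice", "user"), "alice"), 200)
        self.assertEqual(handle_export(Request("root", "admin"), "alice"), 200)
```

Saved in a test module, these cases can be run with `python -m unittest`. If a later change weakens the ownership check, the second test fails in the pull request rather than in production.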

Modern APIs evolve continuously. Endpoints are added, refactored, and reused through a constant stream of pull requests. Authentication logic shifts, middleware is reused in new contexts, and security assumptions quietly drift as systems grow.

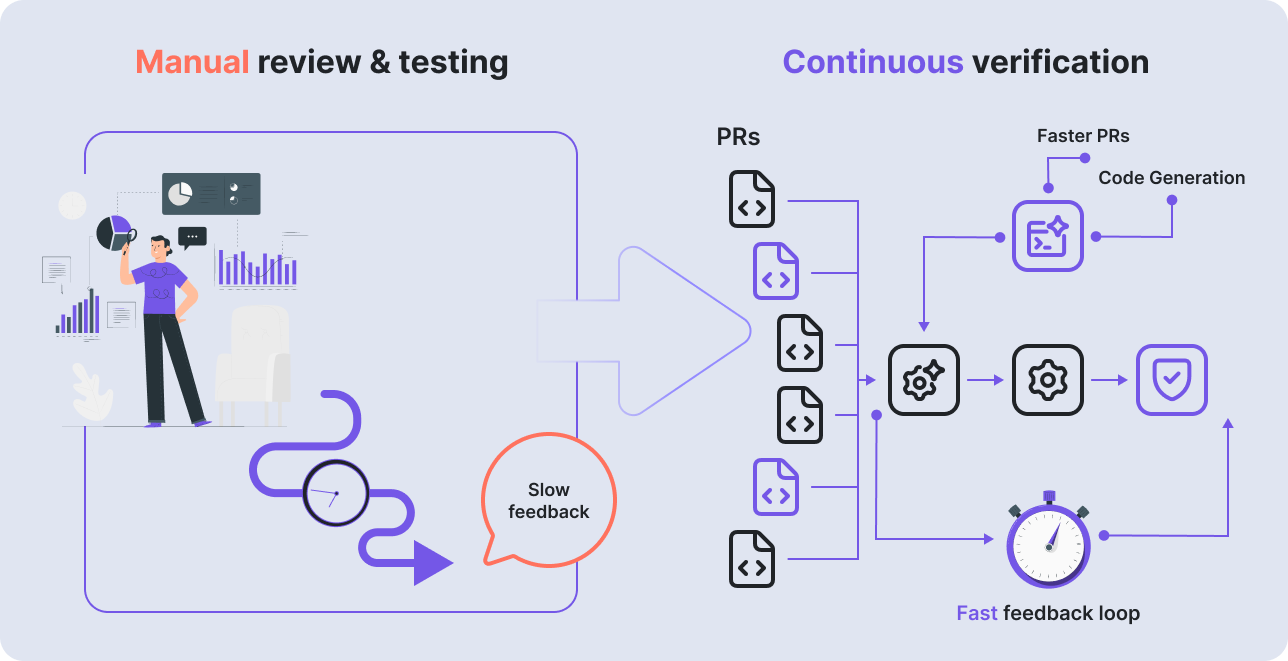

Even without AI, this pace already stretches the limits of manual review and manual testing. Reviewers reason about intent, while ad-hoc tests validate behavior, but neither produces a durable, executable guarantee of what must never be allowed.

AI-generated code compounds the problem, especially when teams adopt it at enterprise scale. New endpoints can appear in seconds, often without deep contextual understanding of the surrounding system. The code may look correct and follow established patterns, yet small differences, such as a missing guard, an incorrect default, or a misplaced middleware, can significantly change an endpoint’s security posture.
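A check that catches this kind of drift can be sketched as a test over the route table itself. The example assumes a hypothetical registry in which every route records an access policy, with `None` meaning nobody declared one; the paths and policy names are illustrative.

```python
from typing import Dict, List, Optional

# Hypothetical route table: path -> declared access policy.
# None models an endpoint added without any explicit decision.
ROUTE_POLICIES: Dict[str, Optional[str]] = {
    "/health": "public",
    "/orders": "authenticated",
    "/admin/users": "admin",
    "/orders/export": None,  # e.g. generated code that skipped the guard
}

ALLOWED_POLICIES = {"public", "authenticated", "admin"}


def routes_without_policy(policies: Dict[str, Optional[str]]) -> List[str]:
    """Return routes whose access policy is missing or unrecognized."""
    return sorted(
        path for path, policy in policies.items()
        if policy not in ALLOWED_POLICIES
    )


def check_every_route_declares_a_policy() -> None:
    """Fail loudly if any route has no explicit access decision."""
    missing = routes_without_policy(ROUTE_POLICIES)
    if missing:
        raise AssertionError(f"routes with no declared policy: {missing}")
```

Run as part of the test suite, the check fails on `/orders/export` until someone makes the access decision explicit, even a deliberate `"public"`.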

In this environment, relying on humans to remember which security cases to test, and when, simply doesn’t scale.

It’s important to be clear about what this approach is, and what it isn’t.

Penetration testing, static analysis (SAST), and dynamic analysis (DAST) all play an essential role in API security. They are designed to uncover vulnerabilities, misconfigurations, and exploitable behavior. In practice, however, they operate by observing symptoms, not by validating security intent.

Pen tests simulate an attacker probing a deployed system. They answer the question, “What can be exploited from the outside?” By definition, they run late in the lifecycle, after exposure has already occurred.

SAST analyzes code patterns to detect known classes of vulnerabilities. It flags risky constructs, but it does not understand business context or authorization intent. It can tell you that a check exists, not whether the check enforces the right rule.

DAST evaluates runtime behavior by exercising live endpoints. Like pen tests, it reasons about observable behavior, not about what the system was meant to allow.

What’s missing across all three is a way to assert and continuously verify security guarantees as part of development.

At its simplest, this approach can be thought of as security-focused unit, component, and integration tests for every API endpoint. These tests encode authorization rules, data-access boundaries, and exposure constraints, and they are continuously updated and enforced as the codebase evolves.
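A minimal sketch of what such tests might encode is shown below, written as plain `test_` functions of the kind a runner such as pytest collects. The `USERS` store, `get_profile` function, and `PUBLIC_FIELDS` allow-list are hypothetical, chosen only to show one data-access boundary and one exposure constraint.

```python
from typing import Dict

# Hypothetical data store; "password_hash" must never leave the service.
USERS: Dict[str, Dict[str, str]] = {
    "alice": {"name": "Alice", "email": "alice@example.com", "password_hash": "x1"},
    "bob": {"name": "Bob", "email": "bob@example.com", "password_hash": "x2"},
}

PUBLIC_FIELDS = {"name", "email"}


class Forbidden(Exception):
    """Raised when a caller asks for data outside its boundary."""


def get_profile(caller_id: str, target_id: str) -> Dict[str, str]:
    # Data-access boundary: callers may read only their own profile.
    if caller_id != target_id:
        raise Forbidden(f"{caller_id} may not read {target_id}")
    record = USERS[target_id]
    # Exposure constraint: return only allow-listed fields.
    return {k: v for k, v in record.items() if k in PUBLIC_FIELDS}


def test_caller_cannot_read_another_users_profile() -> None:
    try:
        get_profile("bob", "alice")
    except Forbidden:
        return
    raise AssertionError("cross-user read was allowed")


def test_response_never_exposes_password_hash() -> None:
    assert "password_hash" not in get_profile("alice", "alice")
```

The allow-list is a deliberate choice in this sketch: a field added to the store later stays private unless someone adds it to `PUBLIC_FIELDS`.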

This doesn’t replace pen tests or analysis tools. It complements them by moving the most fundamental security guarantees upstream, from post-deployment discovery to development-time enforcement.

In security terms, it adds a preventive layer where today we rely heavily on detective controls.

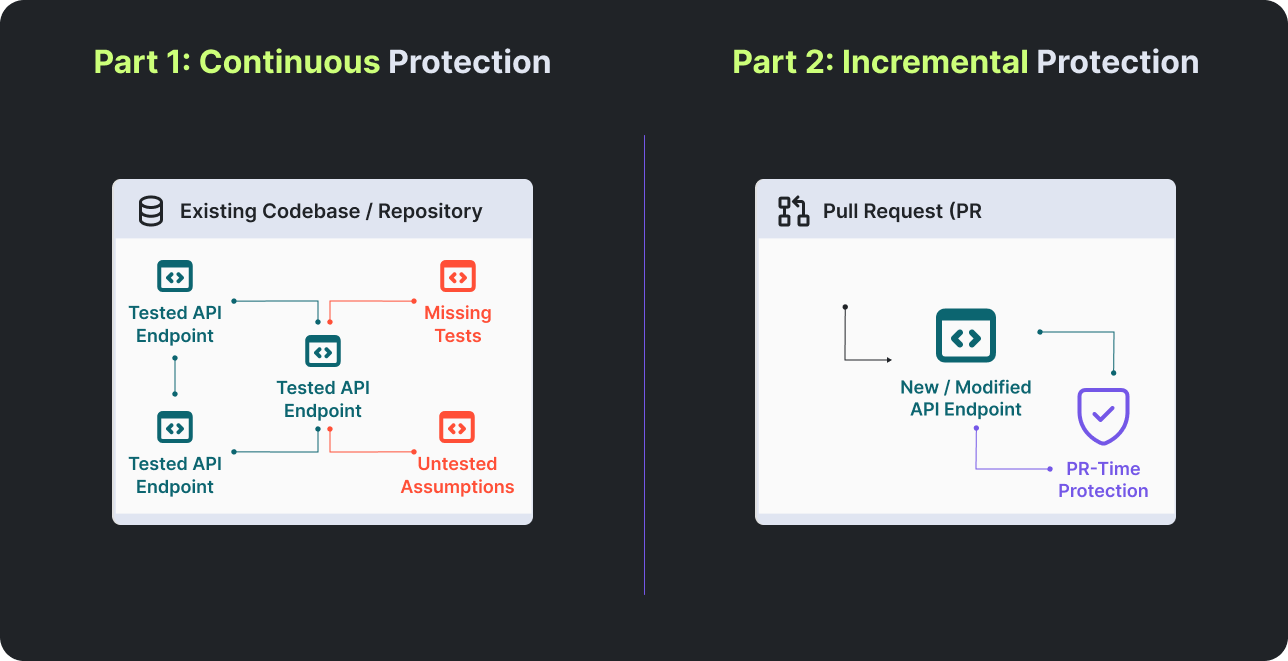

Protecting endpoints during development changes how teams think about API security. Instead of discovering issues after deployment, security becomes something that is enforced continuously as the code evolves.

The first shift is from periodic audits to continuous protection of the existing codebase. Endpoints that lack explicit security tests represent unverified assumptions, even if they have been in production for years.

The second shift is toward incremental verification. Every pull request changes the API surface in small but meaningful ways: adding an endpoint, modifying access logic, or refactoring middleware. Security tests must keep pace, with tests introduced or updated as part of the same change.
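Incremental verification can be sketched as a comparison between two snapshots of the route table. The example assumes, hypothetically, that a build step can produce a mapping of path to access policy for the base branch and for the pull request, and that the set of endpoints whose security tests were touched in the pull request is known. All names and data are illustrative.

```python
from typing import Dict, List, Set


def changed_endpoints(base: Dict[str, str], head: Dict[str, str]) -> List[str]:
    """Endpoints that are new in head or whose access policy changed."""
    return sorted(
        path for path, policy in head.items()
        if base.get(path) != policy
    )


def unverified_changes(
    base: Dict[str, str], head: Dict[str, str], tests_touched: Set[str]
) -> List[str]:
    """Changed endpoints with no added or updated security test."""
    return [p for p in changed_endpoints(base, head) if p not in tests_touched]


# Hypothetical snapshots: path -> access policy.
base_routes = {"/orders": "authenticated", "/admin/users": "admin"}
pr_routes = {
    "/orders": "authenticated",
    "/admin/users": "authenticated",    # policy loosened in this PR
    "/orders/export": "authenticated",  # new endpoint in this PR
}
tests_touched_in_pr = {"/admin/users"}

gaps = unverified_changes(base_routes, pr_routes, tests_touched_in_pr)
```

Here `gaps` contains only `/orders/export`: the loosened admin route is flagged as changed but has an accompanying test update, while the new endpoint has none. A pipeline could fail the pull request whenever `gaps` is non-empty.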

Together, these shifts turn security from an external checkpoint into an internal guarantee.

For years, teams have understood that security should be tested early. What made this difficult wasn’t intent, but feasibility. Encoding security guarantees as tests, and keeping those tests aligned as systems evolved, required constant human effort. As a result, many authorization and data-access rules remained implicit and inconsistently enforced. Approaches like the Early Quality Score (EQS) are designed to surface that gap by focusing on confidence rather than raw test counts.

That constraint is now changing.

A new class of AI-driven development agents is emerging: agents that operate directly on the codebase and continuously generate and update security-focused tests as endpoints change. Instead of relying on human memory and manual reviews, these agents make security intent executable and keep it enforced as code evolves.

At its simplest, this looks like security-focused unit, component, and integration tests for every API endpoint, created and maintained automatically, and revalidated with every code change.

This shift matters not because AI is “smarter,” but because it makes continuous, preventive enforcement operationally possible. As development velocity accelerates, a security posture that relies on post-hoc detection will always lag behind change.

Development-time API security combines continuous protection of existing endpoints with incremental verification on every pull request, ensuring security guarantees evolve alongside the codebase.

Security incidents are often treated as surprises. In reality, many of them are the delayed consequences of assumptions that were never tested.

As API-driven systems continue to scale, security can no longer rely on discovering what went wrong after deployment. The future belongs to teams that treat authorization and data-access rules as first-class, testable guarantees, enforced continuously, where systems are built.

In the next era of software, security won’t be something you discover. It will be something you can continuously prove in code.