How to Embed AI Test Code Generation Agents Into Your PR Workflow and Protect Against Regressions

6 Steps for Safely Changing Existing Code With Test Code Generation Agents

Embedding AI test code generation agents into a pull request (PR) workflow means using autonomous agents to generate, validate, and maintain tests around risky code before, during, and after a PR is opened, so teams can protect against regressions when modifying existing systems.

In enterprise codebases, the biggest risk isn’t writing new code; it’s changing behavior that isn’t fully understood, explicitly tested, or actively owned. Coverage metrics may look healthy yet still fail to provide real confidence. Test code generation agents, run against real production codebases, reduce this uncertainty by freezing existing behavior, validating changes incrementally, and focusing test quality on the actual risk introduced by each pull request.

Software development is changing, not because developers are writing less code, but because they’re increasingly supervising AI-generated code and the systems that generate and validate large volumes of it.

Test code generation is one of the clearest places where this shift is already happening today.

In practice, most teams aren’t asking “can AI write tests?”

They’re asking something far more practical:

How do we make changes to real codebases without breaking things we don’t fully understand?

This post walks through a concrete, end-to-end workflow for embedding a test code generation agent into the SDLC, specifically into your pull request flow, using a scenario every developer recognizes: adding a feature to existing code, under real constraints, with real risk.

No theory. Just how it fits into day-to-day development.

You’re assigned a task:

“Add a feature to this existing service.”

The code:

- Was written a week ago, or maybe years ago

- Has partial tests

- Shows decent coverage (at best), but no one really trusts it

At this point, most developers:

- Skim the code

- Run tests

- Check coverage

- Hope nothing breaks

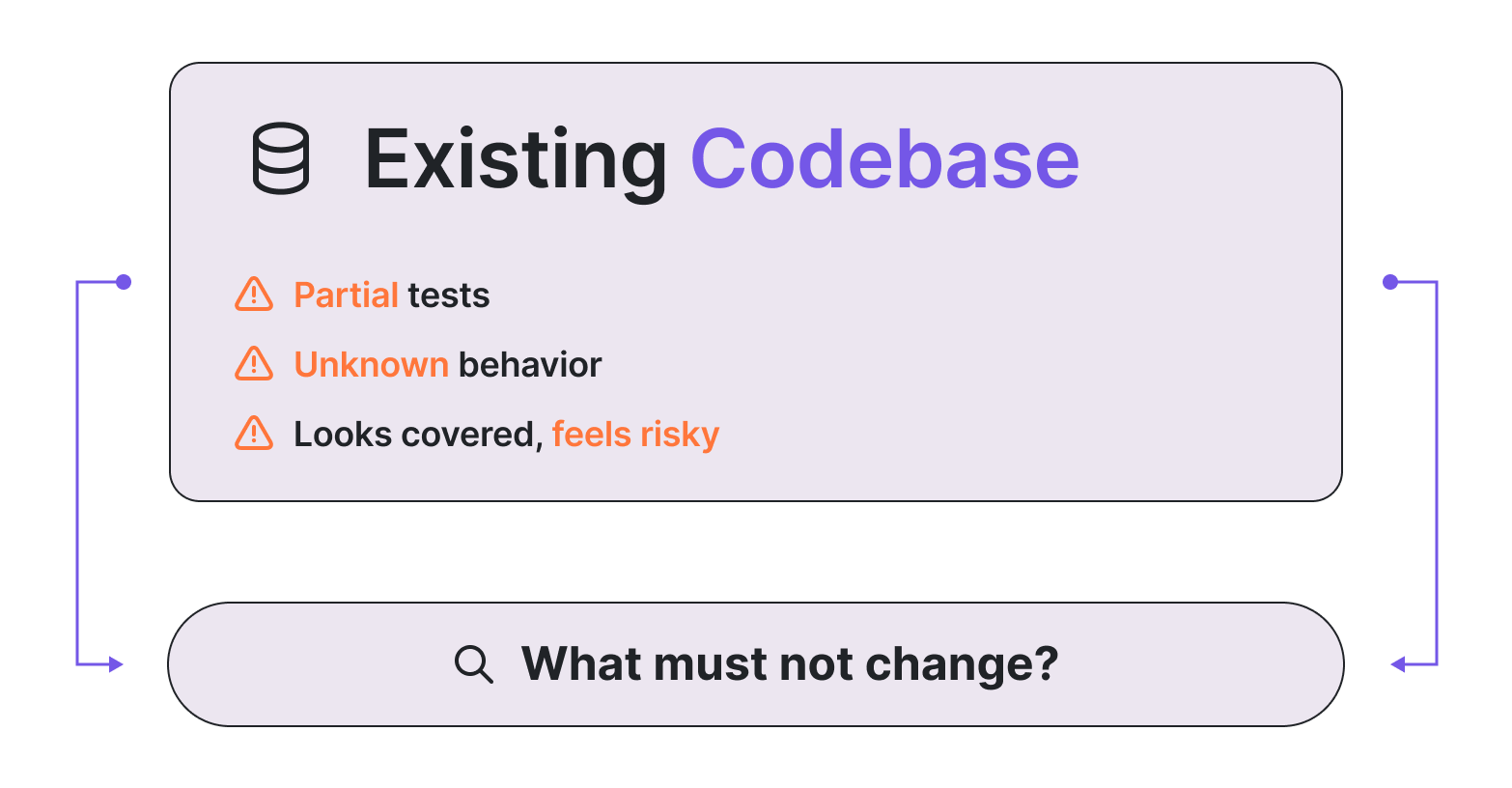

The problem isn’t just lack of tests. The problem is unknown behavior.

Before changing anything, you need to answer one question:

What behavior must not change?

This workflow addresses that risk by separating behavior capture from change implementation.

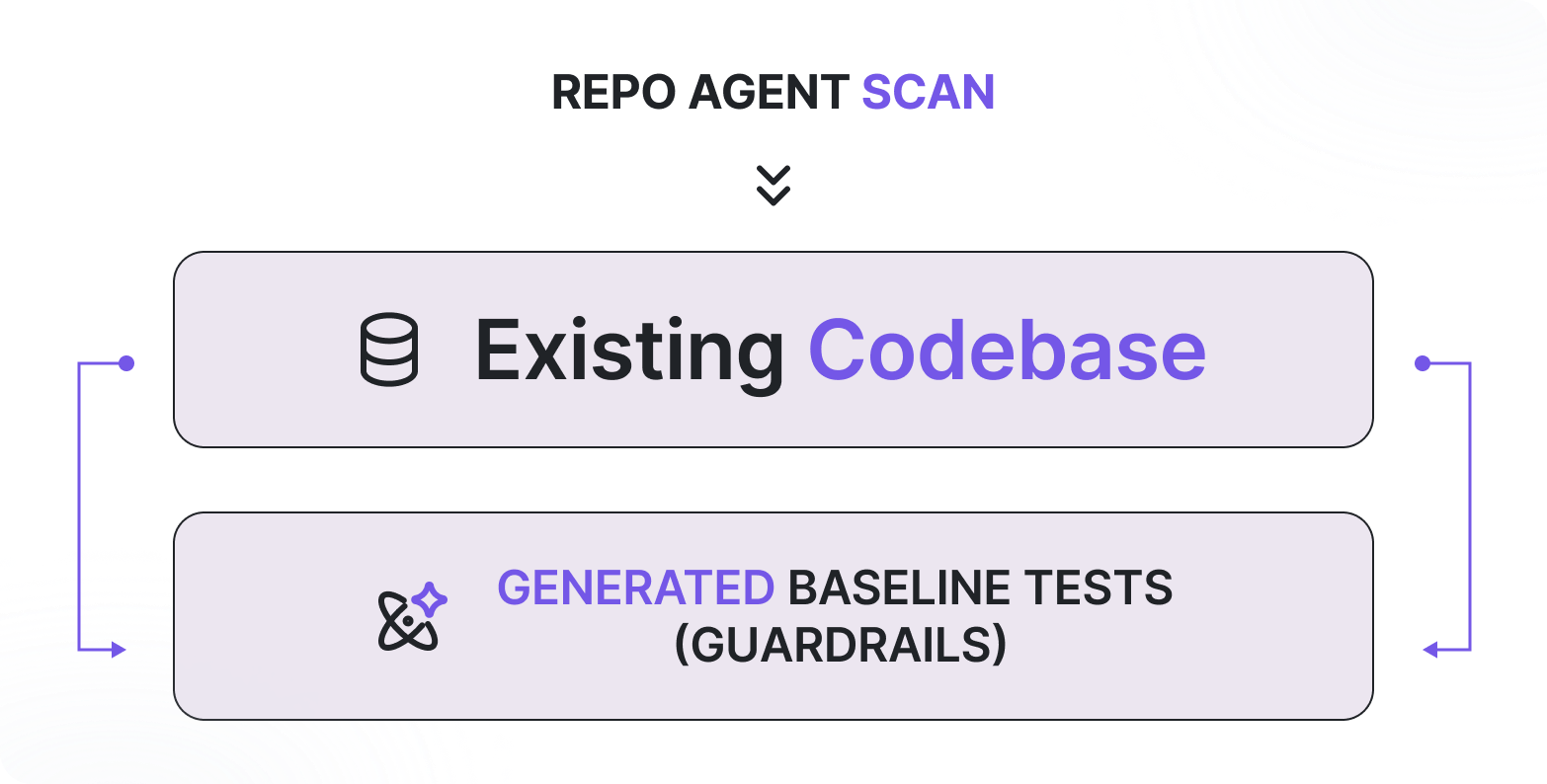

In this workflow, a repo-level test code generation agent analyzes existing code to capture current behavior across relevant modules before changes are made. A PR-level test agent focuses only on the code and logic introduced or modified in a specific pull request.

What a repo-level test code generation agent analyzes

Before touching the implementation, a repo-level test code generation agent analyzes the relevant parts of the codebase and generates tests around the current behavior.

These tests:

- Capture public interfaces and critical logic paths

- Protect implicit assumptions

- Act as a behavioral baseline

- Cover security, functional, and data-related behavior for API endpoints

This isn’t about increasing coverage. It’s about freezing behavior before change.

You haven’t improved the product yet, but you’ve dramatically reduced uncertainty.
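As a rough illustration of what a behavior-freezing test can look like, here is a minimal sketch in Python. The function `legacy_shipping_cost`, its pricing rules, and the recorded baseline values are all hypothetical stand-ins, not output from any particular agent. The point is that the expected values are taken from what the code does today, not from a specification.

```python
import unittest


def legacy_shipping_cost(weight_kg: float, express: bool) -> float:
    """Hypothetical existing code whose rules nobody fully remembers."""
    base = 5.0 + 1.2 * weight_kg
    if express:
        base *= 1.5
    # Implicit assumption worth protecting: a flat surcharge above 20 kg.
    if weight_kg > 20:
        base += 10.0
    return round(base, 2)


# Baseline recorded once from the current implementation, before any change,
# and then committed alongside the tests.
BASELINE = {
    (0.0, True): 7.5,
    (1.0, False): 6.2,
    (1.0, True): 9.3,
    (20.0, False): 29.0,   # boundary: no surcharge at exactly 20 kg
    (20.5, False): 39.6,   # just past the boundary: surcharge applies
}


class ShippingCostCharacterizationTest(unittest.TestCase):
    def test_current_behavior_is_unchanged(self):
        for (weight_kg, express), expected in BASELINE.items():
            with self.subTest(weight_kg=weight_kg, express=express):
                self.assertEqual(legacy_shipping_cost(weight_kg, express), expected)
```

Note that the baseline deliberately includes inputs on both sides of the 20 kg boundary: that is the kind of implicit assumption a later change is most likely to break without anyone noticing.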

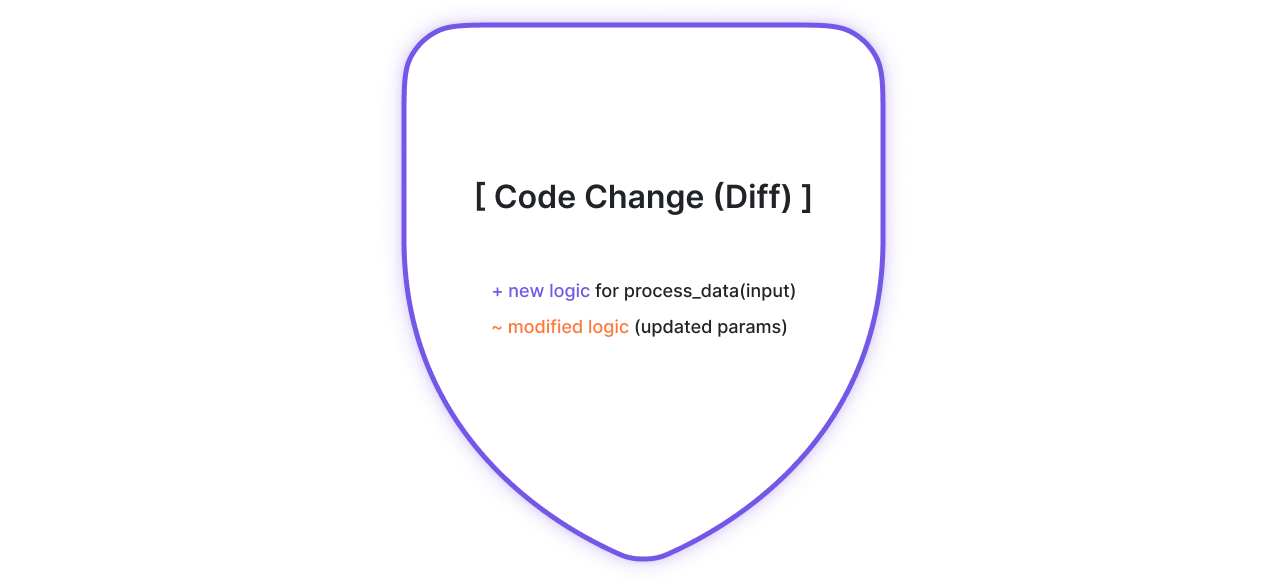

At this stage, you’re only writing code.

- Add new functionality

- Modify existing logic

- Refactor where needed to support the change

The goal here is to express what should change, not to validate impact yet.

You’re capturing intent in code.

Validation comes next.

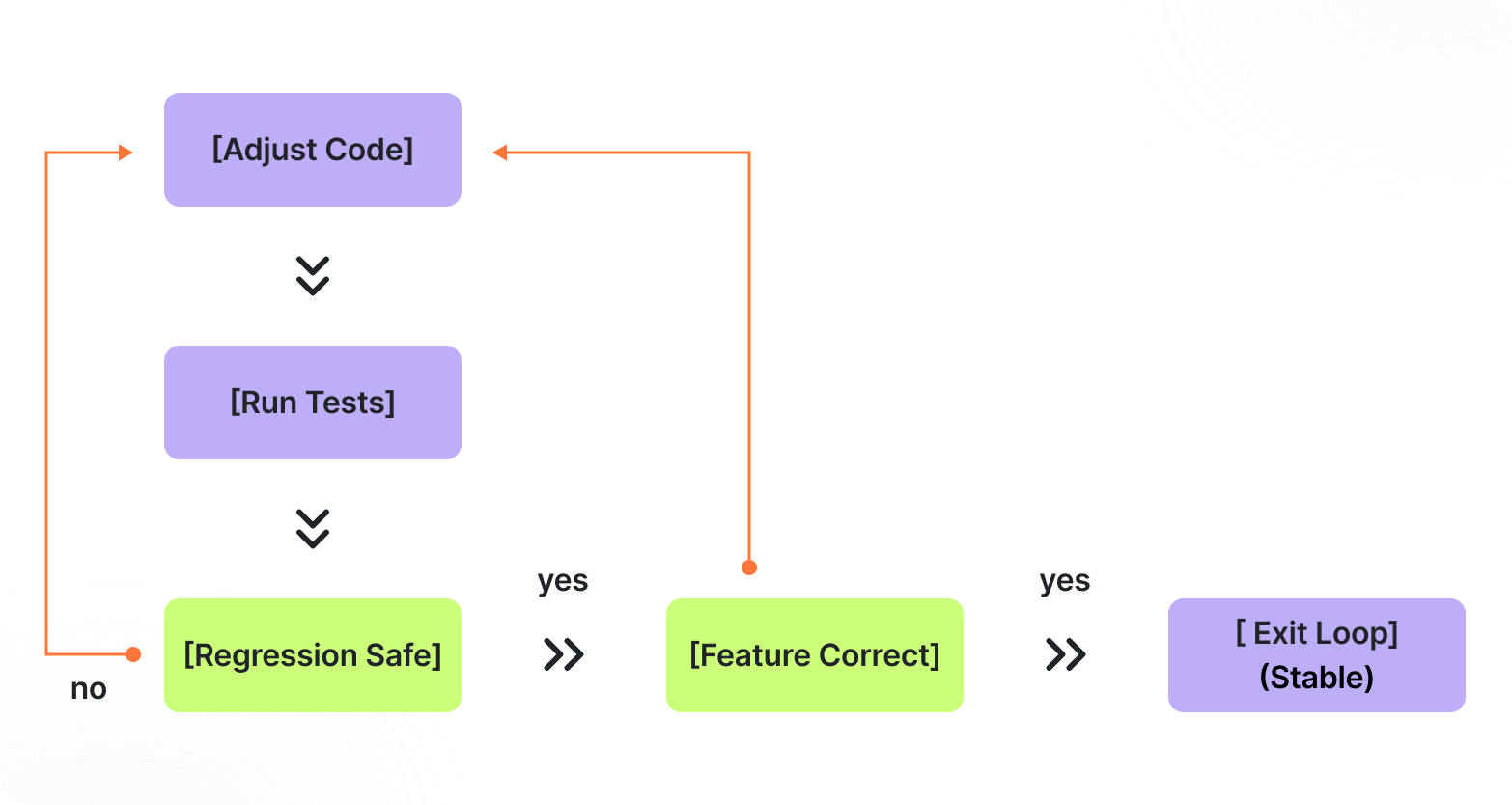

Before opening a PR, run the tests.

The first question to answer is whether you broke existing behavior.

You run all existing tests (including those generated in step 2) to make sure nothing regressed.

Only then do you focus on the new functionality and verify that the change behaves as intended.

From here, you iterate, sometimes involving step 5: you adjust the code and re-run the tests until both are true:

- Existing behavior stays intact

- The new functionality works correctly
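The ordering described here (regressions first, new functionality second) can be sketched as a small pre-PR check. This is an assumption-laden example using Python's standard `unittest` module; `apply_discount`, the two test classes, and `pre_pr_check` are hypothetical names introduced only for illustration.

```python
import io
import unittest


def apply_discount(total: float, code: str = "") -> float:
    """Hypothetical function under change: the 'VIP' code is the new feature."""
    if code == "VIP":
        return round(total * 0.8, 2)
    return total


class ExistingBehaviorTests(unittest.TestCase):
    def test_no_code_leaves_total_unchanged(self):
        self.assertEqual(apply_discount(100.0), 100.0)


class NewFeatureTests(unittest.TestCase):
    def test_vip_code_takes_twenty_percent_off(self):
        self.assertEqual(apply_discount(100.0, "VIP"), 80.0)


def pre_pr_check(baseline_case, feature_case) -> str:
    """Run the baseline tests first; only look at the feature tests if they pass."""
    loader = unittest.defaultTestLoader
    runner = unittest.TextTestRunner(stream=io.StringIO(), verbosity=0)
    if not runner.run(loader.loadTestsFromTestCase(baseline_case)).wasSuccessful():
        return "regression: existing behavior changed"
    if not runner.run(loader.loadTestsFromTestCase(feature_case)).wasSuccessful():
        return "keep iterating: new functionality not working yet"
    return "ready to open the PR"


print(pre_pr_check(ExistingBehaviorTests, NewFeatureTests))
```

Stopping at the first failing stage keeps the feedback focused: a broken baseline is a different problem from an unfinished feature, and it should be looked at first.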

This is where confidence emerges, not from a single green run, but from convergence through iteration.

Why deleting failing tests creates long-term risk

Some tests may fail after your change. This is expected.

The mistake most teams make is deleting failing tests to unblock progress.

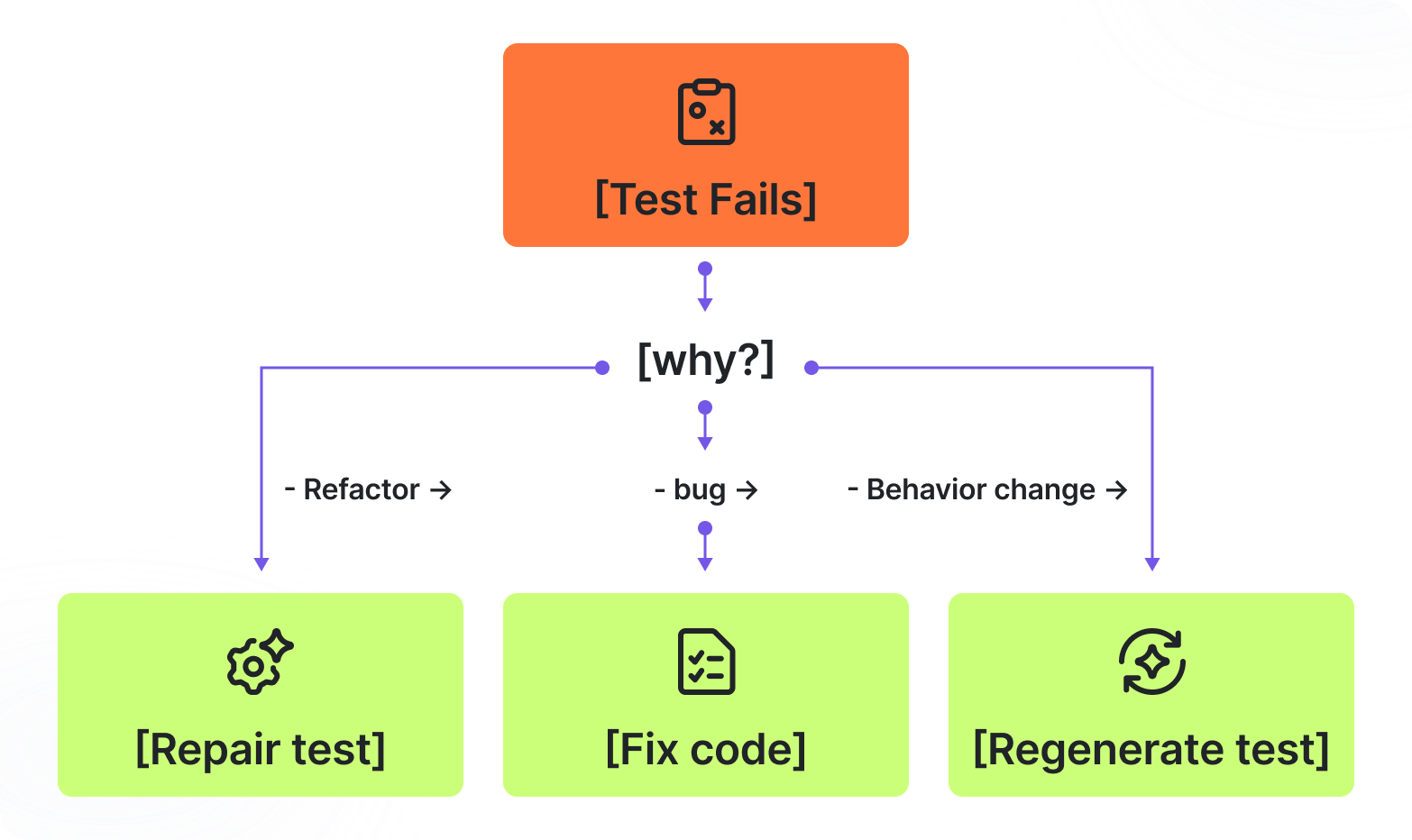

Instead, understand why the test failed and take one of three actions, using a test maintenance or test code generation agent:

- Repair the test if refactoring changed structure but not behavior

- Fix a bug if the test stays red and reveals unintended behavior

- Regenerate the test if behavior intentionally changed

The key idea:

Failing tests are signals, not noise.

This keeps tests aligned with real behavior instead of letting them rot or disappear.
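The three actions above reduce to two questions about a failing test: did observable behavior change, and was that change intended? A minimal sketch of that decision follows; the `Action` enum and `triage_failing_test` function are hypothetical names, and answering the two questions still takes human judgment.

```python
from enum import Enum


class Action(Enum):
    REPAIR_TEST = "repair the test"
    FIX_BUG = "fix the bug in the code"
    REGENERATE_TEST = "regenerate the test"


def triage_failing_test(behavior_changed: bool, change_intended: bool) -> Action:
    """Map the two triage questions to one of the three actions."""
    if not behavior_changed:
        # Only structure moved (a rename, a new signature): the test is stale.
        return Action.REPAIR_TEST
    if change_intended:
        # The old expectation is obsolete: capture the new behavior instead.
        return Action.REGENERATE_TEST
    # Behavior changed and nobody meant it to: the test caught a regression.
    return Action.FIX_BUG


print(triage_failing_test(behavior_changed=True, change_intended=False).value)
```

No branch returns "delete the test": every failure ends in a test that passes for a reason someone can explain.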

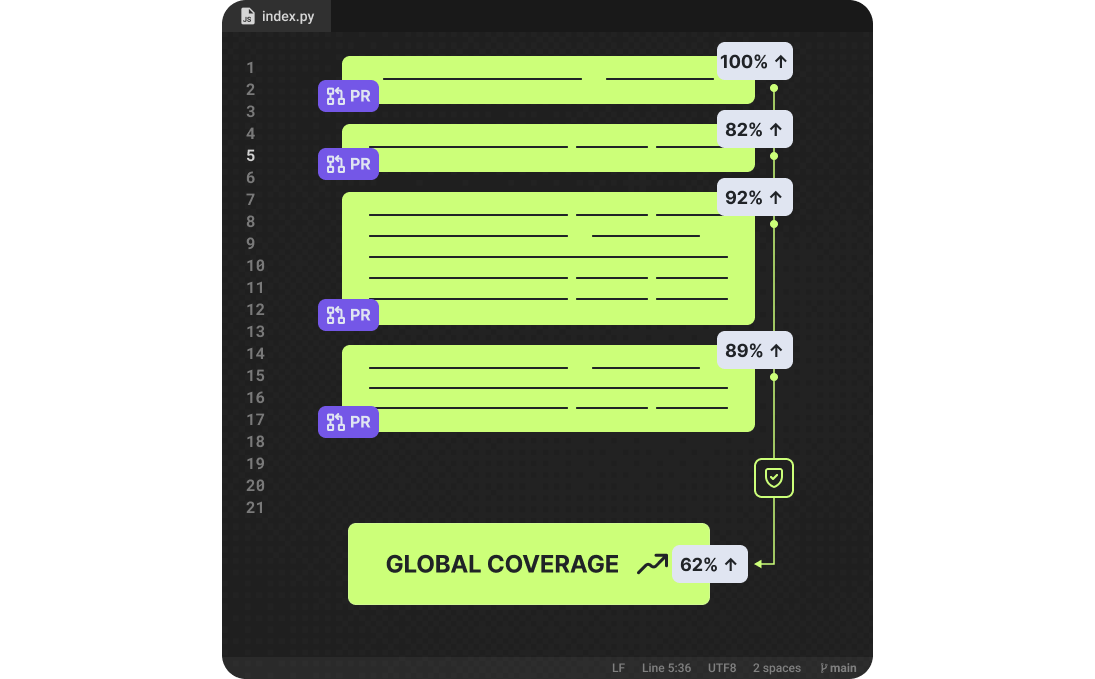

When you open a PR, global coverage numbers don’t tell you much.

This is where a PR-level test agent takes over. Test quality is measured incrementally through PR Coverage, a metric that considers test quality and coverage only for the code introduced or changed in the pull request.

The agent:

- Analyzes the new and risky methods with zero or low coverage

- Identifies untested logic introduced in the PR

- Generates tests scoped to the risk areas
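To make the idea of change-scoped measurement concrete, here is a simplified sketch that computes line coverage over only the lines a PR touched. It is an approximation under stated assumptions: it covers the line-coverage part only, not test quality, and the input dictionaries are hypothetical data that would in practice come from a diff and a coverage report.

```python
from typing import Dict, List, Set, Tuple

LinesByFile = Dict[str, Set[int]]


def pr_coverage(changed: LinesByFile, executed: LinesByFile) -> Tuple[float, Dict[str, List[int]]]:
    """Return (share of changed lines hit by tests, uncovered changed lines per file)."""
    total = 0
    covered = 0
    uncovered: Dict[str, List[int]] = {}
    for path, lines in changed.items():
        hit = lines & executed.get(path, set())
        total += len(lines)
        covered += len(hit)
        missing = sorted(lines - hit)
        if missing:
            uncovered[path] = missing
    # A PR that changes no executable lines has nothing left unprotected.
    ratio = covered / total if total else 1.0
    return ratio, uncovered


# Hypothetical inputs: lines the diff touched, and lines the test run executed.
changed_lines = {"billing.py": {10, 11, 12, 13}, "utils.py": {5}}
executed_lines = {"billing.py": {1, 2, 10, 11}, "report.py": {3, 4}}

ratio, uncovered = pr_coverage(changed_lines, executed_lines)
print(f"PR coverage: {ratio:.0%}")  # PR coverage: 40%
print(uncovered)                    # {'billing.py': [12, 13], 'utils.py': [5]}
```

The uncovered lines are the useful output here: they are where newly generated tests should be aimed, regardless of how high the repository-wide number is.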

This shifts reviews from:

“Coverage looks fine”

To:

“The risky logic in this change is actually protected.”

By the time the PR is merged, you have:

- Tests protecting existing behavior

- Updated existing tests to reflect the changed code

- New tests validating new functionality

- Tests focused specifically on the change

The result isn’t just more tests.

It’s confidence.

Teams notice:

- Faster, calmer PR reviews

- Safer changes to legacy code

- Less fear around “don’t touch that file”

- Healthier test suites that evolve with the code

What’s really changing here isn’t just how tests are written.

It’s how developers work.

The role is shifting from manually producing every line of code to orchestrating, supervising, and validating agents that do different parts of the work.

Developers still own the hardest decisions:

- What behavior must not change

- When a test should be repaired, regenerated, or trusted

- Whether a failure reveals a bug or an intentional shift

That’s not less responsibility; it’s responsibility at a higher level, one that empowers engineers to deliver much more, faster and at higher quality.

Test code generation agents don’t replace engineering judgment.

They give it leverage, exactly where change is risky and confidence matters most.

How does this fit into existing PR workflows?

This approach integrates into existing pull request workflows without changing how teams work day to day. Developers still open PRs, run tests, and merge changes as usual, while test code generation agents operate alongside them at the start, during, and at the end of the workflow to protect existing behavior and surface risk where changes are introduced.

How is this different from simply increasing test coverage?

Coverage measures how much code is exercised, not how well risk is protected. This workflow focuses on freezing existing behavior before change and generating tests scoped to the actual risk introduced in a pull request, rather than optimizing for global coverage metrics.
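A small hypothetical example shows the gap between exercising code and protecting it. Both tests below execute every line of the function, so a line-coverage tool would score them the same, but only one of them notices when the behavior is wrong. All names here are illustrative.

```python
def clamp_percentage(value: int) -> int:
    """Intended behavior: keep a value within 0..100."""
    return max(0, min(100, value))


def clamp_percentage_buggy(value: int) -> int:
    """Same shape, wrong upper bound: a plausible slip during a refactor."""
    return max(0, min(10, value))


def weak_test(fn) -> bool:
    """Executes every line of fn but checks nothing about the result."""
    fn(150)
    return True


def strong_test(fn) -> bool:
    """Pins down the behavior at and beyond both boundaries."""
    return fn(150) == 100 and fn(-5) == 0 and fn(42) == 42


for fn in (clamp_percentage, clamp_percentage_buggy):
    print(fn.__name__, "weak:", weak_test(fn), "strong:", strong_test(fn))
```

The weak test passes for both implementations, while the strong test fails for the buggy one.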

Is this approach safe for large or legacy codebases?

Yes. In fact, it’s most effective there. By capturing existing behavior before changes are made, teams can safely modify legacy code without fully understanding every dependency upfront, while still preventing unintended regressions.

Will this slow down pull requests or CI pipelines?

No. Teams typically see faster, calmer PR reviews. By catching regressions early and focusing tests on risky changes, the workflow reduces back-and-forth in reviews and minimizes late-stage failures.